Over two decades ago, Salesforce pioneered the first multitenant cloud platform, setting a precedent in the industry. Since then, Salesforce has evolved into a comprehensive enterprise platform, capable of encapsulating and automating key aspects of a business, and serving hundreds of thousands of businesses and millions of users in various industries and regions. Salesforce has also enhanced its Customer360 product suite through strategic acquisitions.

Over the last several years, shifts in the market, the industry, and the technology landscape have led us to a number of deep transformations in the foundational Salesforce Platform. These include:

- The emergence of public cloud providers who invest heavily in infrastructure.

- Rapid advancements in AI, including machine learning, generative AI, and agentic experiences.

- Increased data residency and regulatory requirements across industries and countries.

- The need for handling real-time data and transactions at a rapidly increasing scale.

- Increased focus on requirements for cybersecurity, system availability, performance, and resilience.

- Customer demand for an integrated suite that offers a highly resilient, loosely coupled, and strongly coherent architecture.

In response to these changes, particularly the seismic shift of AI and its impact on businesses, Salesforce has completely transformed its platform from the ground up, laying the groundwork for the next generation of applications and customer use cases, all while upholding our trust goals.

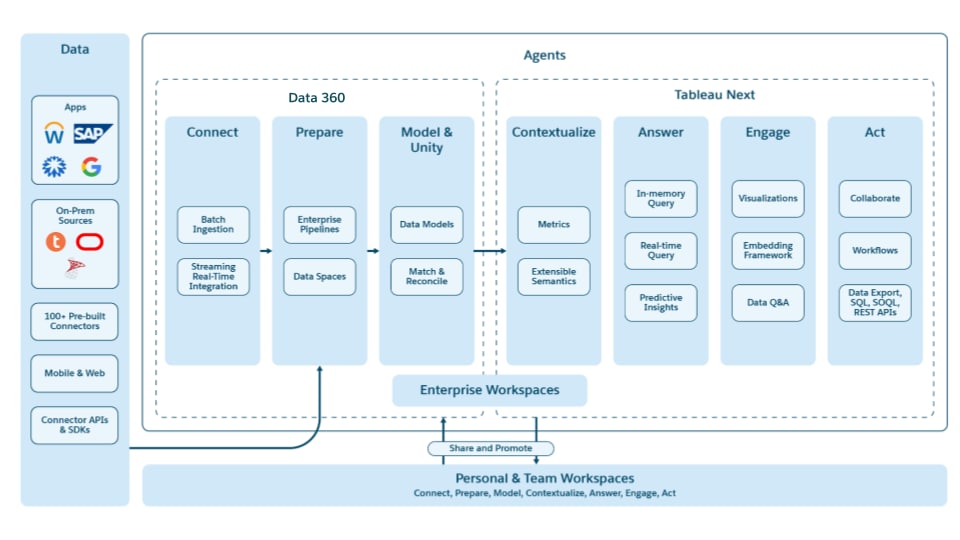

The launch of Agentforce at Dreamforce 2024 and the accompanying diagram represent the culmination of this extensive effort, involving thousands of Salesforce Technology and Product organization team members. Currently, more than 95% of our customers have transitioned to this new platform. The successful migration of a majority of our customers, including those with the most demanding workloads, underscores the ingenuity of our engineers and reaffirms Salesforce’s core values of Trust, Customer Success, and Innovation.

Since the launch of Agentforce, Salesforce has continued to pioneer the use of AI in enterprise applications and has been a market leader in developing agentic experiences that provide real-time, conversational experiences for existing and new business capabilities.

This white paper, crafted in collaboration with top engineers, provides a detailed exploration for builders who appreciate the complexities behind major technological transformations. It delves into the essential architectural enhancements that keep the platform scalable, secure, and ready to handle future applications while meeting the changing needs of our customers. We recommend beginning with the Architecture Overview chapter to understand the full picture. From there, you can either continue in sequence or explore the chapters that interest you most.

Emin Gerba

Chief Architect, Salesforce

The architectural principles of the Salesforce Platform below capture the foundation and differentiation for how we engineer features and capabilities:

- Enterprise-Grade Trust: Trust is Salesforce's #1 value. We not only prioritize the availability and security of our services but also build the access control, compliance, and security features that let our customers meet their own compliance and security standards on the Salesforce Platform.

- Multitenant: All services and infrastructure are built to host multiple customers. This provides a strategic pattern for scaling with use, as well as standardizing on a common high-bar of availability and security regardless of the size of our customers.

- Metadata-Driven: Metadata is at the heart of how our multitenant services are customizable. Our metadata is extensible so that admins and developers can build upon existing work, as well as benefit from future product updates from Salesforce and ecosystem partners.

- API First: The Salesforce Platform prioritizes a rich and coherent API portfolio that covers everything that can be done via Salesforce-native user interfaces. This allows developers and partners to leverage and re-compose the platform’s functionality for integrating systems or building new user experiences.

- Open and Interoperable: The Salesforce Platform can be integrated into any of our customers’ enterprise architectures. We designed the Salesforce Platform to work with other cloud-based and on-prem systems, as well as provide APIs, tools, and integration standards for external systems to integrate with the Salesforce Platform.

- Agentic: The Salesforce Platform is rapidly evolving to be agent-first across the entire application suite. We want users to be able to engage with Salesforce through deep agentic conversational experiences that allow them to get work done and interact with their data in increasingly natural ways.

The current Salesforce Platform represents the latest stage in the evolution of Salesforce’s capabilities since the 2008 debut of the Force.com Platform. Recent key transformations include:

- Adoption of Hyperforce and a shift to cloud-based architectures.

- Evolution from a monolithic architecture to a structure with independent services.

- Introduction of Data 360 and Lakehouse technologies alongside traditional relational data stores.

- Deep integration of AI technologies, generative and machine learning, and an evolution to agentic experiences across the platform.

These changes have expanded and refined the platform’s capabilities with minimal customer disruption, thanks to robust abstractions that allow Salesforce engineers to advance the underlying technologies seamlessly. These abstractions also remain key to the Salesforce Platform’s value of simplifying the technical complexities of enterprise-grade software, like security, availability, and technology conventions, so app developers can focus on solving their unique challenges. The Salesforce Platform’s capabilities are highlighted below:

The Salesforce Platform is shown as a set of layers that make up the system. Each layer represents a group of related features that are important to applications built on the platform. The sub-boxes within each layer provide illustrative examples of these capabilities. Each lower layer’s capabilities are integrated into all the layers above, ensuring a consistent and coherent experience across the entire Salesforce application suite.

The Salesforce Platform embodies extensive engineering transformations across all layers of a mature technology platform developed over the past 20 years. Driven by evolving customer demands and new technologies, these changes enable support for new app types and solutions. The transformations are interconnected, with changes in lower layers influencing the evolution of all subsequent layers above.

The Salesforce Platform is structured into several layers, each contributing to its comprehensive capabilities:

- Hyperforce: The foundational infrastructure has evolved from first-party data centers to public cloud providers, enhanced with Salesforce technologies for secure, compliant, highly-available, and cost-efficient hosting.

- Metadata Framework: Provides a stable abstraction for apps to build on, even as the technologies we have and use evolve. Includes an object-relational mapper, prescriptive order of execution, and a “core” runtime that bridges the metadata definitions with the metadata-driven runtimes.

- Data: Includes a multitenant relational database and a petabyte-scale Lakehouse for managing Salesforce and non-Salesforce data, supporting unstructured data and content management, advanced search, governance, and analytical processing capabilities.

- AI: Builds on the data layer with foundational, trusted AI technologies that leverage predictive and generative AI to power agentic experiences.

- App Platform Services: Provides tools for IT admins, developers, and vendors to build and customize applications, offering an opinionated abstraction to simplify common and complex tasks.

- Business Capabilities: Offers a range of capabilities to meet diverse business needs, allowing developers to tailor applications as needed.

- APIs and API Management: Ensures all platform capabilities are accessible through well-formed APIs, facilitating service and layer interdependencies.

- User and Developer Experience: Features user-friendly interfaces for end-users and a range of development tools from low-code to pro-code for application development and customization, with support for modern AI-driven development.

- Integration: Integrates with any enterprise architecture, enabling compatibility with Salesforce and non-Salesforce systems through data connectors, zero-copy data integration and other tools.

- Apps and Industries: Provides a suite of customizable apps and industry-specific solutions built on the platform’s integrated capabilities, leveraging the full range of lower-layer functionalities, and with deeply integrated AI agents.

Salesforce has been developing global data center infrastructure for nearly 25 years, predating many current hyperscalers and IaaS vendors. Hyperforce, the current generation of Salesforce’s infrastructure evolution, is designed to operate across multiple public cloud providers worldwide.

It’s tailored to meet customer needs for elastic B2C scale, global data residency, enhanced availability, top-tier security, and regulatory compliance. Hyperforce standardizes infrastructure across all Salesforce products, facilitating rapid integration of new acquisitions.

Hyperforce ensures delivery of the Salesforce Platform, allowing for swift deployment of new features and applications, meeting data residency and regulatory compliance requirements in more than 20 regions across the world.

During Salesforce’s transition to Hyperforce, significant differences in services, interfaces, and compliance levels among hyperscalers were identified. To build a robust and portable foundation for the Salesforce Platform, these architectural principles were adopted:

- Infrastructure as Code: Utilizing a domain-driven architecture, this principle involves declarative coding for infrastructure, creating immutable artifacts, and automating infrastructure on-demand using standards like Kubernetes and Service Mesh.

- Zero-Trust Security: Implementing a zero-trust security model with comprehensive defense strategies including identity management, authentication, authorization, network isolation, least privilege security policies, and encryption of data both in transit and at rest.

- Managed Services: Emphasizing the use of multitenant and multi-cloud services, this principle enhances portability across different infrastructures and environments such as commercial, government, and air-gapped systems.

- Built-in Resilience: Mission-critical services are spread across multiple Availability Zones to ensure high availability. Data is replicated across Availability Regions. Services are also labeled with availability tiers to manage service level objectives and resilience planning.

- Fully Observable: Integration of all services into a standard observability platform for efficient monitoring, which includes log collection, metrics gathering, alerting, distributed tracing, and tracking of service operations like traffic volume, error rates, and resource utilization.

- Automated Operations: This includes automated management of the infrastructure lifecycle and predictive AIOps (AI for operations) for maintaining quality of service and for detecting and addressing service degradations and failures.

- Automated Scale: Focusing on scalability and cost-efficiency, this principle allows for operational flexibility across different scales without increasing operational risks, abstracting specific account limits related to the cloud provider.

- FinOps Aware: Public cloud brings infrastructure agility, but with the risk of elevated costs. We embrace an efficiency-driven engineering culture throughout the service lifecycle, without compromising on availability, security, and customer trust.

These principles guide the development and operation of Salesforce’s Hyperforce platform, ensuring it remains adaptable, secure, and efficient across various environments.

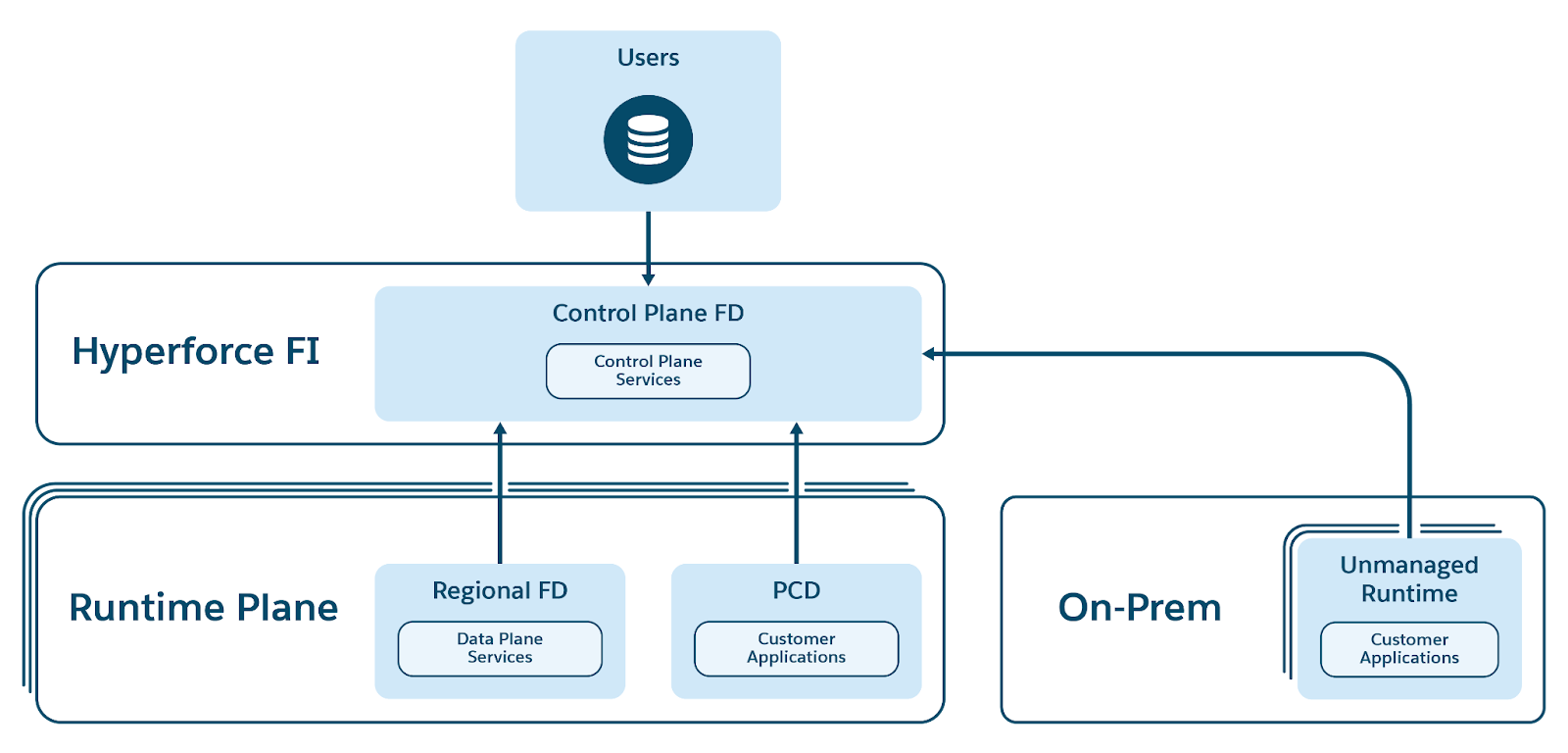

The Salesforce Platform and its supporting services run on the Hyperforce Foundation, which comprises multiple Hyperforce Instances. These instances are strategically distributed across various countries to align with customer preferences for geography and availability. To meet stringent data residency and operational requirements, one or more Hyperforce Instances can be optionally grouped and designated as an Operating Zone. Each instance is regularly updated to ensure safety, scalability, and compliance with local and legal standards.

Hyperforce Instances are made up of several Hyperforce Functional Domain instances, which are clusters of services delivering specific functionalities. Foundational functional domains provide critical services like security, authentication, logging, and monitoring, all of which are essential for other Hyperforce services. Business functional domains support various Salesforce products such as Sales Cloud, Service Cloud, and others, facilitating their product functionality.

Services within a Functional Domain may be organized into Cells, which are scalable and repeatable units of service delivery. The Hyperforce Cell corresponds to what is traditionally known as a "Salesforce instance" wherein one or more Salesforce organizations (org) reside. A cell is a scale unit as well as a strong blast radius boundary. Supercells provide a logical grouping of multiple cells to demarcate a larger blast radius due to shared services across cells. Multiple Supercells may be present in a Functional Domain. Cells and Supercells allow Hyperforce to scale horizontally within a functional domain while also maintaining strong control over the size of the blast radius.
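
The containment property of Cells and Supercells described above can be illustrated with a small data model. This is a hypothetical sketch: the class names (`Cell`, `Supercell`) and the two helper functions are assumptions made for illustration and do not reflect Salesforce's internal implementation.

```python
from dataclasses import dataclass, field


@dataclass
class Cell:
    """A scale unit hosting one or more orgs (hypothetical model)."""
    name: str
    orgs: set = field(default_factory=set)


@dataclass
class Supercell:
    """A logical grouping of cells that share some services (hypothetical model)."""
    name: str
    cells: list = field(default_factory=list)


def cell_failure_radius(supercell, cell_name):
    """Orgs affected when a single cell fails: only the orgs in that cell."""
    for cell in supercell.cells:
        if cell.name == cell_name:
            return set(cell.orgs)
    return set()


def shared_service_failure_radius(supercell):
    """Orgs affected when a service shared across cells fails: the whole supercell."""
    affected = set()
    for cell in supercell.cells:
        affected |= cell.orgs
    return affected
```

In this model, adding cells grows capacity horizontally while the worst-case impact of a cell-local failure stays bounded by the size of one cell.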

Each Hyperforce Instance is mapped to one Availability Region, a concept found in all public cloud infrastructures, and is capable of operating independently of all other Hyperforce Instances. All mission-critical services and data in the Hyperforce Instance are distributed and replicated across at least three Availability Zones, to achieve fault tolerance and stability. Furthermore, data backups are copied to other suitable Hyperforce Instances for business continuity and regulatory compliance.

Hyperforce infrastructure is continually evolving, as new Hyperforce Instances and Cells are created or refreshed in place. Customers are insulated from changes in the physical details of Hyperforce. All externally visible customer endpoints are accessed via stable and secure Salesforce My Domains (for example, acme.my.salesforce.com) that securely route traffic to the current data and service location. Outbound traffic (for example, mail and web callouts) is best secured using mechanisms like DomainKeys Identified Mail (DKIM) and mTLS, so that customers’ on-premises infrastructure doesn’t hardcode physical details of Salesforce infrastructure, such as IP addresses, that can change over time.

Hyperforce Functional Domains are designed with robust security measures. Each domain is secured at the perimeter and isolated, with services within a domain separated into dedicated accounts for added security. Communication between services is facilitated securely via Service Mesh or similar protocols. Traffic management is handled by ingress and egress gateways that inspect, route, and apply necessary controls like circuit breakers or rate limits to all incoming and outgoing traffic.

Services within a Hyperforce Functional Domain are grouped into Security Groups, with only those in the edge group exposed to the public internet. Runtime security policies enforce communication rules between different security groups, adhering to the principle of least privilege to ensure services have only the necessary access.
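
A least-privilege policy between security groups can be thought of as a default-deny allow-list. The sketch below is illustrative only: the group names (`internet`, `edge`, `app`, `data`) and the `ALLOWED_FLOWS` table are assumptions, not the actual Hyperforce policy model.

```python
# Hypothetical allow-list of (source group, destination group) pairs.
# Only the edge group is reachable from the public internet.
ALLOWED_FLOWS = {
    ("internet", "edge"),
    ("edge", "app"),
    ("app", "data"),
}


def is_allowed(source_group: str, dest_group: str) -> bool:
    """Default-deny: a flow is permitted only if it is explicitly listed."""
    return (source_group, dest_group) in ALLOWED_FLOWS
```

Because anything not listed is denied, adding a new service grants it no access until a rule is written for it.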

Each geographical region has a Hyperforce Edge Functional Domain that terminates transport layer security and employs programmable web application firewall policies to preemptively address threats. This ensures that only legitimate traffic reaches Hyperforce endpoints while maintaining a secure and efficient customer experience. Additionally, internal network links between Hyperforce Instances are tightly controlled, and all log data containing personally identifiable information is anonymized to comply with GDPR standards.

A Hyperforce grid comprises multiple Hyperforce Instances that share the same control plane, and it is designed to isolate sensitive workloads where appropriate. It ensures zero leakage of any customer or system data, platform metadata, or monitoring data across grids. The Control Plane consists of redundant Hyperforce Instances that host essential services for creating, managing, and monitoring customer-facing Hyperforce Instances.

Service and infrastructure code for all Hyperforce services is securely developed within a dedicated control plane functional domain, utilizing source code management, continuous integration, testing, and artifact building services. The generated code is scanned for threats and vulnerabilities before it is packaged into standardized, digitally signed containers and stored in image registries. Code deployment is handled by authorized pipelines in the Hyperforce Continuous Delivery system, with deployment privileges restricted to authorized teams and operators. An Airgapped Control Plane handles additional safeguards necessary in such environments.

Identity and Access Management (IAM) services enforce just-in-time approval to limit access duration and actions, while audit trails monitor all activity, feeding into real-time detection systems to identify and alert on any suspicious activities.
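
Just-in-time access can be pictured as a grant that is scoped to specific actions and expires after a short window, with every decision written to an audit trail. The following is a minimal sketch under those assumptions; `AccessGrant`, `approve`, `is_permitted`, and the in-memory `AUDIT_TRAIL` are hypothetical names, not Salesforce IAM APIs.

```python
from dataclasses import dataclass
from datetime import datetime, timedelta, timezone


@dataclass(frozen=True)
class AccessGrant:
    user: str
    actions: frozenset
    expires_at: datetime


# In-memory stand-in for an audit log that would feed detection systems.
AUDIT_TRAIL = []


def approve(user, actions, ttl, now):
    """Issue a grant limited to specific actions and a short time window."""
    grant = AccessGrant(user, frozenset(actions), now + ttl)
    AUDIT_TRAIL.append(("approved", user, tuple(sorted(actions)), now))
    return grant


def is_permitted(grant, action, now):
    """Allow an action only if it is in scope and the grant has not expired."""
    allowed = action in grant.actions and now < grant.expires_at
    AUDIT_TRAIL.append(("allowed" if allowed else "denied", grant.user, action, now))
    return allowed
```

Both the approval and each later check are recorded, so denied attempts are as visible to monitoring as successful ones.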

As Salesforce transitions its services to Hyperforce on public clouds from its first-party data centers, it’s crucial to revamp our budget creation, cost visualization, and resource optimization strategies.

Our cost management approach isn’t just about cutting costs; it’s a strategic process that differentiates between products aimed at growth versus those that are stable. It plans for consumption-based pricing and margins that uphold product availability, aligning with our core value of Trust. Public cloud accounts are organized hierarchically and linked to specific products and executives. Detailed service-level resource tagging, enriched with organizational metadata, helps pinpoint costs for individual microservices. Tools like Tableau and Slack, along with advanced forecasting tools, are employed to provide executives and teams with real-time data on costs, forecasts, and budget analyses, instilling confidence in future financial planning.
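
Tag-based cost attribution amounts to grouping billing line items by a tag value. The sketch below assumes a simplified line-item shape (`cost_usd` plus a `tags` dictionary) and a hypothetical `service` tag key; real cloud billing exports are richer than this.

```python
from collections import defaultdict


def cost_by_tag(line_items, tag_key):
    """Sum cost per tag value; items missing the tag are reported as 'untagged'."""
    totals = defaultdict(float)
    for item in line_items:
        owner = item["tags"].get(tag_key, "untagged")
        totals[owner] += item["cost_usd"]
    return dict(totals)


# Example input with hypothetical services and costs.
items = [
    {"cost_usd": 10.0, "tags": {"service": "search", "team": "data"}},
    {"cost_usd": 5.5, "tags": {"service": "search", "team": "data"}},
    {"cost_usd": 2.0, "tags": {"team": "infra"}},
]
```

Surfacing an explicit `untagged` bucket makes gaps in tagging visible instead of silently dropping those costs.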

To ensure optimal cost management, Salesforce employs a mix of Compute Savings Plans, Spot Capacity, and On-Demand Capacity Reservations (ODCR), guaranteeing the necessary capacity. These reservations are managed through advanced time-series forecasting and custom dashboards, allowing for human oversight and decision-making. Setting achievable goals on unit transactional cost reductions (the cost to process a defined volume of business transactions) is an effective strategy to drive improvements. The Hyperforce Unit Cost Explorer tool enables teams to analyze and manage unit cost trends, attributing costs to specific services, and identifying new improvement opportunities. The Salesforce Cloud Optimization Index, or “COIN” score, assesses services against a dynamic list of savings opportunities, motivating service teams to maintain optimal resource efficiency.
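
As a worked illustration of unit transactional cost, the sketch below divides total spend by the number of transaction units processed and computes the relative change between two periods. The unit size of one million transactions and the dollar figures are assumptions chosen for the example, not Salesforce figures.

```python
def unit_cost(total_cost_usd, transactions, unit_size=1_000_000):
    """Cost to process `unit_size` business transactions."""
    if transactions <= 0:
        raise ValueError("transactions must be positive")
    return total_cost_usd / (transactions / unit_size)


def relative_reduction(previous, current):
    """Fractional reduction in unit cost between two periods."""
    return (previous - current) / previous


# Hypothetical figures: $1,200 for 3M transactions, then $1,200 for 4M.
last_quarter = unit_cost(1200.0, 3_000_000)
this_quarter = unit_cost(1200.0, 4_000_000)
```

Here the spend is unchanged, but the unit cost falls because more transactions were served for the same money, which is the kind of trend a unit-cost goal is meant to reward.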

In our unwavering commitment to Sustainability, we actively pursue reductions in our carbon footprint, setting specific targets to decrease our unit Carbon to Serve, a measure of emissions relative to work performed.

Security and availability are crucial foundational aspects of our enterprise-grade platform, essential for maintaining customer trust. At Salesforce, these controls are integral to the Salesforce Platform, automatically enforced through shared services and software frameworks. This built-in approach ensures that individual systems benefit without requiring additional effort.

Managing and continuously enhancing this extensive array of security and availability controls across thousands of services and hundreds of teams presents a significant challenge. However, it’s crucial, as overlooking even a minor detail can result in a security breach or system outage.

Hyperforce is a secure and compliant infrastructure platform that supports the development and deployment of services with advanced security features. It offers strong access control, data encryption, and compliance with security standards. Salesforce adheres to over 40 security and compliance standards such as PCI DSS, GDPR, HIPAA, FedRAMP, and more.

Key security principles include Zero Trust Architecture (ZTA) and end-to-end encryption, ensuring the protection of customer data across all processing stages. Salesforce adheres to security standards and best practices from the secure software development lifecycle to production operations, as well as robust application-level security practices to mitigate potential threats.

The ZTA cybersecurity paradigm ensures that all users, devices, and service connections undergo authentication, authorization, and continuous validation, regardless of location. ZTA and Public Key Infrastructure (PKI) are essential for modern cybersecurity, establishing trust boundaries and secure communication without relying on perimeter security.

However, PKI deployments often overlook the importance of certificate revocation and governance over root certificate authorities. Salesforce’s implementation of certificate revocation is robust and scalable, supporting end-to-end PKI security.

Additionally, Hyperforce enforces ZTA through mutual transport layer security between services, using short-lived private keys and just-in-time access for users with role-based access control.

The Salesforce Platform ensures the protection of data in transit by using TLS with perfect forward secrecy cipher suites, which secures data as it travels across the network between user devices and Salesforce services, as well as within the Salesforce infrastructure domains.
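
To make mutual TLS and forward-secret cipher suites concrete, here is a minimal server-side configuration sketch using Python's standard `ssl` module. The specific cipher string and minimum protocol version are assumptions for illustration; the source does not state which suites Salesforce enables. Certificate and trust-store loading is omitted.

```python
import ssl


def build_server_context() -> ssl.SSLContext:
    """Server-side TLS context requiring client certificates and ECDHE key exchange."""
    ctx = ssl.SSLContext(ssl.PROTOCOL_TLS_SERVER)
    ctx.minimum_version = ssl.TLSVersion.TLSv1_2
    # For TLS 1.2, allow only ephemeral ECDHE key exchange, which provides
    # forward secrecy. TLS 1.3 suites are always forward secret.
    ctx.set_ciphers("ECDHE+AESGCM:ECDHE+CHACHA20")
    # Mutual TLS: the server rejects clients that do not present a certificate.
    ctx.verify_mode = ssl.CERT_REQUIRED
    # A real deployment would also call ctx.load_cert_chain(...) for the server
    # identity and ctx.load_verify_locations(...) for the trusted client CAs.
    return ctx


ctx = build_server_context()
```

With forward secrecy, compromise of a long-lived private key does not expose previously recorded sessions, which complements the short-lived keys mentioned above.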

For data at rest, the Salesforce Platform employs a key management system supported by hardware security modules. In its multitenant platform, each tenant is assigned a unique encryption key, preventing any crossover of keys between tenants.

The security of communication and encryption is heavily dependent on entropy for generating keys or random data. Recognizing the vulnerability of cryptographic protocols to attacks due to predictable key generation, the Salesforce Platform mitigates this risk by sourcing entropy from multiple origins for all key generation processes. We leverage the memory encryption feature available in various processors, enabled by a cloud service provider, to enhance protection against cold boot attacks.
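
One common way to combine entropy from several origins is to hash all inputs together, so the result stays unpredictable as long as at least one input is. The sketch below shows that idea only; it is not Salesforce's key generation process, the function names are hypothetical, and production systems should rely on vetted random generators and hardware security modules.

```python
import hashlib
import os


def mix_entropy(sources):
    """Combine several entropy inputs into one 32-byte seed using SHA-256.

    Each input is length-prefixed so that different splits of the same
    bytes cannot collide.
    """
    h = hashlib.sha256()
    for chunk in sources:
        h.update(len(chunk).to_bytes(8, "big"))
        h.update(chunk)
    return h.digest()


def new_key(extra_sources=()):
    """Derive a 32-byte key from the OS random source plus any extra inputs."""
    return mix_entropy([os.urandom(32), *extra_sources])
```

An extra source here might be a sample from a hardware random number generator; if that source turned out to be weak, the operating system's contribution would still protect the output.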

Salesforce has a customized JDK to meet many compliance standards, such as Federal Information Processing Standard (FIPS), simplifying the process for developers and operators by eliminating the need for them to undertake compliance work themselves. This customization not only helps prevent risks such as XML external entity injection (XXE) but also enhances Salesforce’s cryptography agility and ability to interchange cryptography strategies as needed. It allows the transformation of non-compliant code—whether developed internally or sourced from open repositories—into FIPS-compliant code without necessitating a complete rewrite, thus reducing the workload on development teams and maintaining adherence to secure-by-default design principles.

Additionally, Salesforce has incorporated frameworks to counter vulnerabilities like cross-site scripting (XSS), cross-site request forgery (CSRF), and SQL injection by integrating protective measures into the Secure Software Development Lifecycle (SSDL).

A centralized secrets management system, reinforced by role-based access controls (RBAC), is implemented to secure both services and user access. Furthermore, code scanning tools are employed to prevent the accidental exposure of secrets in production environments through source code management systems.

Phishing remains a significant threat to organizations, leading Salesforce to implement phishing-resistant multi-factor authentication (MFA) in accordance with a number of industry best practices, including CISA (Cybersecurity and Infrastructure Security Agency) Zero Trust principles. This includes hardware-backed keys for employees with production access and a secure kernel for controlled access to cloud service provider accounts.

To maintain a robust security posture, Salesforce has standardized security controls and integrated cloud-native security services into Hyperforce, providing enhanced visibility, threat detection, and policy enforcement. A comprehensive security information and event management system is in place for real-time monitoring, alerting, and reporting, which is supported by a thorough vulnerability management program and cloud security posture management tools to continuously identify, assess, and remediate vulnerabilities.

Additionally, a web application firewall filters and monitors HTTP traffic to protect against various attacks, and a range of network security tools including firewalls, intrusion detection and prevention systems, virtual private networks, and endpoint detection and response agents are utilized to provide continuous monitoring and threat detection. Network segmentation and micro-segmentation are implemented to minimize the attack surface and contain potential breaches.

Salesforce has also developed and implemented a robust incident response plan tailored to the unique challenges of Hyperforce, featuring predefined procedures for identifying, containing, and mitigating security incidents, ensuring a rapid and effective response to potential security threats.

Salesforce manages mission-critical customer workloads that demand high availability. Our strategy for high availability includes various organizational facets such as our service ownership model, incident management, and operational reviews. Key technical elements of our strategy include our monitoring architecture, AI-driven operations automation, and automated safety mechanisms for production changes.

To consistently achieve high availability across thousands of services, a three-step approach manages technical risks at scale.

First, availability architecture standards are established, defining best practices such as:

- Redundancy with automated failover. To handle the constant failures that a large cloud-based system encounters, Salesforce builds its services with a high level of redundancy, fully automated failure detection, and seamless automated recovery for both complete and partial failures.

- Limit blast radius. Failures will happen, so the team designs all of its services with intentional blast radius maximums to cap the impact of failures. The most classic and visible example is the Hyperforce Cell (formerly known as a Pod).

- Compartmentalize failures. Prevent failures from spreading and compounding across independent units of the system. Fault-tolerant API calls between services are a key pattern that prevents a cascade of failure across the distributed system. Along the way, the team carefully balances compartmentalization against redundancy.

- Scale automatically. To serve unpredictable load without performance degradation, services scale up quickly and scale down slowly, triggered by saturation of resources such as CPU, memory, or queue depth, without relying on slow, fallible human operators.

- Fast rollbacks. We set rollback targets in minutes for all services, and automatically test rollbacks in pre-production environments by making roll-forward, back, and forward again a default operation. The team makes extensive use of feature flags for even faster, more granular emergency switches and rollouts.

- Protect all services receiving API calls. Load-shedding, tenant fair limits, web application firewalls, and sophisticated layer seven protections are deployed at all levels of the system, from our outermost perimeter services exposed directly to the internet down to the team’s deepest internal services, which can be accidentally attacked by bugs in higher-level calling services.

- Soften dependencies. Dependencies between services are designed to be soft wherever possible to allow them to fail or succeed independently. Caching is one of the most common patterns here - often a stale result from a downstream dependency is adequate for continued function.

- Favor async communication. Asynchronous, brokered communication between services decouples those services from each other and buffers load spikes between them.

- Make API calls fault-tolerant. To be tolerant of partial failures and transient network issues, we use several patterns: timeouts and deadlines, circuit breaking, and retries with backoff. We prefer non-blocking calls when possible to limit resource consumption and blocking. Backwards and forwards compatibility is enforced with schema-level linting at build time and integration testing.

- Manage service quotas and constraints. The team sets quotas and constraints across its service fleet, such as IP addresses, disk IOPS, or the capacity of a given Kubernetes cluster. The team aggregates, monitors, and alerts against usage of these quotas and constraints centrally to prevent an approaching limit from impacting the system at runtime.
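
Two of the fault-tolerance patterns named in the standards above, retries with backoff and circuit breaking, can be sketched in a few lines. This is a generic illustration rather than Salesforce's implementation: the class and parameter names are hypothetical, and per-call timeouts are left to the wrapped function.

```python
import random
import time


class CircuitOpenError(RuntimeError):
    """Raised when the breaker is open and calls fail fast."""


class CircuitBreaker:
    def __init__(self, failure_threshold=3, reset_after=30.0, clock=time.monotonic):
        self.failure_threshold = failure_threshold
        self.reset_after = reset_after
        self.clock = clock
        self.failures = 0
        self.opened_at = None

    def call(self, fn):
        if self.opened_at is not None:
            if self.clock() - self.opened_at < self.reset_after:
                raise CircuitOpenError("circuit open; failing fast")
            # Reset window elapsed: allow one trial call (half-open state).
        try:
            result = fn()
        except Exception:
            self.failures += 1
            if self.failures >= self.failure_threshold:
                self.opened_at = self.clock()
            raise
        self.failures = 0
        self.opened_at = None
        return result


def retry_with_backoff(fn, attempts=4, base_delay=0.1, max_delay=2.0, sleep=time.sleep):
    """Retry fn with exponential backoff and full jitter; never retry an open circuit."""
    for attempt in range(attempts):
        try:
            return fn()
        except CircuitOpenError:
            raise
        except Exception:
            if attempt == attempts - 1:
                raise
            delay = min(max_delay, base_delay * 2 ** attempt)
            sleep(random.uniform(0, delay))
```

Jitter spreads retries from many callers over time so they do not arrive in synchronized waves, and the breaker stops callers from piling load onto a dependency that is already failing.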

Second, a multi-layered inspection model ensures services meet these standards. This includes automated chaos testing, scanning, and linting for anti-patterns, and architecture reviews with senior architects to catch issues not addressed by automation.

Third, solutions are integrated into Hyperforce to ease adherence to these standards. This includes automatic telemetry collection, default redundancy, and failover mechanisms, and built-in protections like load shedding and DDoS defense, all activated by default for individual services.
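
Load shedding with tenant fair limits is often implemented as a token bucket per tenant, so that one tenant's burst cannot consume capacity reserved for others. The sketch below shows that general technique; the `TenantFairLimiter` class and its parameters are hypothetical and not the Hyperforce mechanism.

```python
import time


class TenantFairLimiter:
    """Per-tenant token bucket: each tenant gets its own rate and burst budget."""

    def __init__(self, rate_per_sec, burst, clock=time.monotonic):
        self.rate = rate_per_sec
        self.burst = burst
        self.clock = clock
        self._tokens = {}
        self._last = {}

    def allow(self, tenant):
        now = self.clock()
        elapsed = now - self._last.get(tenant, now)
        self._last[tenant] = now
        # Refill tokens for the elapsed time, capped at the burst size.
        tokens = min(self.burst, self._tokens.get(tenant, self.burst) + elapsed * self.rate)
        if tokens >= 1.0:
            self._tokens[tenant] = tokens - 1.0
            return True
        # Shed the request: the tenant has exhausted its budget.
        self._tokens[tenant] = tokens
        return False
```

A rejected request would typically be answered with a retryable error so well-behaved callers back off rather than fail outright.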

Salesforce handles an immense volume of telemetry data, including metrics, logs, events, and traces, which traditional monitoring solutions can’t always manage effectively.

To address this, Salesforce developed a comprehensive observability system that integrates with its software development lifecycle, operations, and support functions. This system provides a unified experience for engineering and customer support teams, while meeting scale needs and reducing licensing costs for third party software.

The metrics infrastructure at Salesforce, built on OpenTSDB and HBase, supports large-scale collection, storage, and real-time querying of time-series data. Non-real-time use cases utilize Trino and Iceberg, handling over 2 billion metrics per minute to provide insights into CPU utilization, memory usage, and request rates. For log management, Salesforce uses Splunk for its powerful indexing and search capabilities. Apache Druid supports real-time ingestion and analysis of large-scale event data, crucial for understanding user interactions and system events. Distributed tracing across microservices is managed with OpenTelemetry and Elasticsearch, aiding in identifying specific latency and failure points.

Salesforce also implemented an Application Performance Monitoring (APM) infrastructure that integrates with its technology stacks for data collection and telemetry stores. This auto-instrumentation of applications simplifies data collection and ensures consistent telemetry across services. APM’s unified dashboard correlates various data types, enhancing the ability for engineers to monitor performance, diagnose issues, and optimize systems through a cohesive interface.

By standardizing observability tools, Salesforce links disparate telemetry types across services using distributed tracing. This creates a comprehensive service dependency graph, visualizing the entire service ecosystem and tracing requests with fine granularity. This capability is crucial for pinpointing issues, identifying bottlenecks, and supporting AI-driven features like anomaly detection, predictive analytics, and automated remediation.

To speed up incident resolution, we’ve developed an AI Operations (AIOps) Agent that automatically detects, triages, and remediates incidents on behalf of human operators, with human intervention required only in a minority of cases. The AIOps Agent is a scalable multi-agent-reactive toolkit designed to facilitate the development of complex, reactive agent-based systems. It’s highly modular, can be enhanced with various tools to extend its functionality, and is designed to scale efficiently with increasing numbers of agents. Key features include a reactive architecture, enabling agents to dynamically react to changes in their environment; tool enhancement, allowing for easy integration of tools to extend agent capabilities; and a pluggable planning module, which enables customization of agents’ planning strategies.

We swiftly and proactively detect 91% (at the time of writing) of our core CRM product incidents using advanced machine learning models from Merlion, a publicly available open-source library developed by our AI research team. Merlion provides an ensemble of machine learning models, such as Isolation Forests, statistical detectors, Random Forests, and long short-term memory (LSTM) neural networks, that process the extensive telemetry data generated by our systems in near real time.
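The ensemble idea can be illustrated with a minimal sketch. It does not use Merlion or any of the models named above; as an assumption for illustration, it substitutes two simple statistical detectors (a z-score test and a median-absolute-deviation test) and flags a point only when both agree. All function names and thresholds are hypothetical.

```python
from statistics import mean, median, pstdev

def zscore_flags(series, threshold=3.0):
    """Flag points more than `threshold` standard deviations from the mean."""
    mu, sigma = mean(series), pstdev(series)
    if sigma == 0:
        return [False] * len(series)
    return [abs(x - mu) / sigma > threshold for x in series]

def mad_flags(series, threshold=3.5):
    """Flag points using the median absolute deviation, which is robust to outliers."""
    med = median(series)
    mad = median(abs(x - med) for x in series)
    if mad == 0:
        return [False] * len(series)
    return [0.6745 * abs(x - med) / mad > threshold for x in series]

def ensemble_anomalies(series, min_votes=2):
    """Return indexes flagged by at least `min_votes` detectors."""
    votes = zip(zscore_flags(series), mad_flags(series))
    return [i for i, v in enumerate(votes) if sum(v) >= min_votes]

# Hypothetical request latencies in milliseconds; the last point is a spike.
latency_ms = [100, 102, 98, 101, 99, 103, 97, 100, 102, 98, 101, 99, 100, 500]
print(ensemble_anomalies(latency_ms))
```

Requiring agreement between detectors trades some sensitivity for fewer false alarms; a production ensemble would typically weight or calibrate its members rather than count votes.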

79% of incidents (at the time of writing) are automatically resolved by the agent’s actions. Our AIOps Agent can process and triage data vectors such as logs, profiling, diagnostics, time series, and service-specific artifacts to recommend remediation actions. The AIOps Agent controller and planner choose an agent with specific skills to perform actions in production.

For the remaining incidents that require human involvement, the AIOps Agent routes unresolved issues to the appropriate service teams. It does so by interpreting the nature and context of each incident using XGenOps, an in-house fine-tuned model trained on operational datasets like problem records, incidents, JFRs, and logs, so that each incident is directed to the team with the necessary expertise. This saves over 2,800 hours of engineering time per week by reducing the need for engineers to triage unresolved issues manually.

To manage the risk of outages from nearly 250,000 production changes made weekly, fully automated deployment systems are used to enforce safe change practices, eliminating human error. Off-the-shelf systems weren’t scalable or customizable enough, prompting the development of more tailored solutions.

The custom continuous deployment system ensures safety through multiple layers, following industry-standard blue/green deployment strategies:

- Mandatory testing evidence for each change.

- Initial canary testing of changes.

- Staggered deployment with controlled blast radius.

- Soaking and health checks between deployment stages.

- Mitigating conflict with existing moratoriums and incidents.
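The safety layers listed above can be sketched as a small control loop. This is an illustrative sketch only, not the actual deployment system: the function name, its parameters, and the choice to roll back every previously deployed target on a failed health check are all assumptions.

```python
from typing import Callable, List, Sequence

def staged_rollout(
    stages: Sequence[Sequence[str]],
    deploy: Callable[[str], None],
    is_healthy: Callable[[str], bool],
    rollback: Callable[[str], None],
    has_test_evidence: bool,
    change_blocked: bool,
) -> str:
    """Deploy stage by stage, stopping at the first unhealthy stage."""
    if not has_test_evidence:
        return "rejected: no test evidence"
    if change_blocked:
        return "deferred: moratorium or active incident"
    done: List[str] = []
    for stage in stages:  # the first stage acts as the canary
        for target in stage:
            deploy(target)
            done.append(target)
        # A real system would soak here for some time before checking health.
        if not all(is_healthy(t) for t in stage):
            for t in reversed(done):
                rollback(t)
            return f"rolled back after stage {list(stage)}"
    return "completed"

log = []
stages = [["canary"], ["cell-1", "cell-2"], ["cell-3", "cell-4", "cell-5"]]
result = staged_rollout(
    stages,
    deploy=lambda t: log.append(("deploy", t)),
    is_healthy=lambda t: t != "cell-2",  # simulate one unhealthy target
    rollback=lambda t: log.append(("rollback", t)),
    has_test_evidence=True,
    change_blocked=False,
)
print(result)
```

Because each stage is larger than the one before it, a faulty change is caught while its blast radius is still small; in this run the third stage is never touched.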

Additionally, continuous integration systems have been optimized to run millions of AI-selected tests, enabling rapid releases while minimizing regression risks.

The core architectural principle of the Salesforce Platform is its metadata-driven design. Salesforce engineers create multitenant services and data stores. Each application on the platform is essentially a collection of metadata that tailors how these multitenant services are utilized by individual customers. That’s why a common marketing phrase for the Salesforce Platform is that “everything is accessed with metadata”.

The platform emphasizes structured and strongly-typed metadata. This metadata serves as an abstraction layer between the customer experience and the underlying Salesforce infrastructure and implementations. This approach enhances both the usability and quality of applications. For instance, instead of using SQL schema definitions and queries, customers interact with structured metadata like entities, fields, and records via Salesforce Object (sObject) APIs. This design allows the platform to integrate new data storage technologies or modify existing ones without necessitating application rewrites, thereby supporting continuous development best practices.

The Salesforce Platform architecture features a “layered extension” approach that supports four key personas in building and extending apps:

- Salesforce Engineering: Teams develop native apps like Sales Cloud and Service Cloud, which are deployed across all services and runtimes through an extensive release process. These apps are made available to all tenants through licensing and provisioning mechanisms.

- External Partners: Independent Software Vendors (ISVs) and other partners can extend the metadata created by Salesforce to build value-added solutions, such as schema extensions on Sales Cloud data models or additional validation rules for Service Cloud case records. They can package these solutions for distribution to multiple customers.

- Organization-specific IT Admins and Developers: They can customize their applications beyond what ISVs offer, tailoring solutions to meet unique business challenges like proprietary or region-specific processes.

- Individual End Users: End users can personalize their app experience, such as changing the column order in a list view or setting a default tab.

Each persona can independently iterate on the same application because lower layers don’t depend on changes made by personas in higher layers, and because strong versioning and backward compatibility contracts are upheld.

One feature that highlights the “layered extension” concept is the Record Save Order of Execution, which ensures that business logic from all four layers is applied in a predictable sequence. This allows more specific, higher-layered business logic determined by the org admin or IT developer to appropriately override lower-layered logic during record saving that might be provided by Salesforce or an external partner.
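As a rough illustration of layered logic applied in a predictable sequence, the sketch below runs handlers layer by layer so that values set by a higher layer replace those set by a lower one. It is not the actual Record Save Order of Execution; the layer names, handler shape, and field names are hypothetical.

```python
# Lowest layer first; later layers may override values set by earlier ones.
LAYER_ORDER = ["salesforce", "partner", "org", "user"]

def save_record(record, handlers_by_layer):
    """Apply each layer's handlers in order and return the resulting record."""
    result = dict(record)
    for layer in LAYER_ORDER:
        for handler in handlers_by_layer.get(layer, []):
            result.update(handler(result))
    return result

handlers = {
    "salesforce": [lambda r: {"status": "New", "priority": "Medium"}],
    "partner": [lambda r: {"priority": "High" if r["amount"] > 1000 else r["priority"]}],
    "org": [lambda r: {
        "region_code": "EMEA",
        "priority": "Critical" if r["amount"] > 5000 else r["priority"],
    }],
}

saved = save_record({"amount": 7500}, handlers)
print(saved)
```

Each handler sees the output of the layers beneath it, which is what lets an org-level rule refine a partner-provided default without either party editing the other's logic.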

Additionally, the platform’s metadata frameworks utilize a “Core” runtime and a proprietary Object-Relational Mapper (ORM) with multitenancy built-in, connected to a relational database. This Core runtime enables shared memory state, referential integrity validations, and transactional commits, which prioritizes app stability and enhances the reliability of app deployments. The architecture has been continually evolving to support the growing scale of application complexity. For example, as of October 2025, there are over 85,000 entities defined by Salesforce, and over 300 million custom entities defined by our customers.

Historically, the Core runtime hosted the majority of platform and app functionality. The current architecture of the Salesforce Platform now includes hundreds of independent, metadata-driven services. The Core runtime remains the single system of record for application metadata, leveraging the unique benefits of a monolithic architecture for metadata management. The relevant metadata is synchronized to local caches in independent services, powering the diverse array of scalable services for application runtimes.

Data is an essential asset for organizations, and Hyperforce provides a reliable foundation for its storage at Salesforce. The key challenge is to store data in a manner that optimizes its utility for applications. The Salesforce Platform has transformed the data layer by accommodating various storage and access requirements. It effectively balances costs, read/write speeds, storage capacity, and data types to meet diverse needs.

As AI and analytics increasingly shape enterprise applications, data has emerged as a pivotal element. Its importance lies in its ability to enable AI and analytics to learn, analyze, make decisions, and automate processes.

Data originates in System of Record (SOR) databases, fulfilling the operational requirements of businesses. It then transitions through various transformations to big data platforms, which are essential for powering AI and analytics-driven applications.

Effective management of data, from transactional information to analytical insights, is crucial for extracting value and supporting sophisticated applications. Salesforce Database (SalesforceDB) stands out as a premier transactional database for managing SOR data, while Data 360 serves as a robust big data platform that enhances AI and analytics capabilities.

Transactional data and metadata are essential to the Salesforce Platform. SalesforceDB is a modern, cloud-native relational database designed specifically for Salesforce’s multitenant workloads, similar to other cloud databases from major providers but with custom features for Salesforce’s architecture. It extends PostgreSQL, separates compute and storage, and leverages Kubernetes and cloud storage, enhancing operations with tenant-specific functionalities like encryption and sandboxes.

SalesforceDB handles all transactional CRM data, upwards of 1.1 trillion transactions per month, as well as metadata for Data 360 and related services. Its primary objectives are to ensure trust through durability, availability, performance, and security; scale for large customers; and facilitate simplified, reliable cloud operations. It achieves these goals with a design that separates compute and storage layers, an immutable, distributed storage system, and log-structured merge tree data access. This enables advanced features like per-tenant encryption of data in storage and efficient sandboxes and migrations.

The SalesforceDB service architecture runs across three availability zones, with compute and storage replicated across these zones to ensure the system remains available even if any node or entire zone is lost. All services run in Kubernetes to enable automated failure recovery and service deployments.

To provide high levels of durability and availability, the ultimate system of record for SalesforceDB is cloud storage like AWS’s S3. Operations such as archiving and cross-region replication are managed at this cloud storage level. Storage objects are immutable, enhancing data distribution and replication for high availability.

Due to high latency in cloud storage, SalesforceDB uses storage caches to access data. These caches are distributed storage systems that maintain temporary copies of storage objects in a cluster of nodes, ensuring replication and durability as needed by the database. Separate caches are used for transaction log storage and data file storage.

The SQL compute tier consists of a primary database cluster and two standby clusters in three different availability zones. The primary cluster handles all database modifications, while the standby clusters only handle query operations.

SalesforceDB utilizes a log-structured merge tree (LSM) data structure, where changes are initially recorded in a transaction log and accumulated in memory. The committed changes are then collectively written out into key-ordered data files, which are periodically merged and compacted to optimize storage efficiency.

This structure effectively eliminates concurrent-update issues that are common in databases that update storage directly. By using the LSM approach, SalesforceDB supports critical features such as immutable storage, making it a robust solution for managing Salesforce workloads.

Data in storage is immutable; once data files are written and made visible, they don’t change. Transaction logs are append-only, simplifying data access patterns and enhancing reliability. This structure supports uncoordinated reads, simplifies backups, boosts scalability, and facilitates storage virtualization, making it well-suited for cloud environments.
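The log-structured merge tree flow described above (append to a log, accumulate in memory, flush to immutable key-ordered files, then compact) can be sketched in a few lines. This is a toy model under simplifying assumptions, not SalesforceDB's implementation: the class name is hypothetical, runs are kept in memory as tuples, and lookups scan linearly where a real engine would use indexes.

```python
class TinyLSM:
    """Toy log-structured merge tree with immutable, key-ordered runs."""

    def __init__(self, memtable_limit=2):
        self.log = []        # append-only transaction log
        self.memtable = {}   # recent changes held in memory
        self.runs = []       # immutable sorted runs, oldest first
        self.memtable_limit = memtable_limit

    def put(self, key, value):
        self.log.append((key, value))  # record the change first
        self.memtable[key] = value
        if len(self.memtable) >= self.memtable_limit:
            self.flush()

    def flush(self):
        """Write the memtable out as a new immutable, key-ordered run."""
        if self.memtable:
            self.runs.append(tuple(sorted(self.memtable.items())))
            self.memtable = {}

    def get(self, key):
        if key in self.memtable:
            return self.memtable[key]
        for run in reversed(self.runs):  # newest run wins
            for k, v in run:
                if k == key:
                    return v
        return None

    def compact(self):
        """Merge all runs into one, keeping only the newest value per key."""
        if self.runs:
            merged = {}
            for run in self.runs:  # oldest to newest, so newer values overwrite
                merged.update(run)
            self.runs = [tuple(sorted(merged.items()))]

db = TinyLSM(memtable_limit=2)
db.put("a", 1)
db.put("b", 2)   # reaches the limit and triggers a flush
db.put("a", 3)
db.put("c", 4)   # second flush
db.compact()
print(db.runs)
```

Existing runs are never modified in place: a flush adds a run and compaction replaces the set of runs with a newly written one, which is why readers need no coordination with writers.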

Transactions in SalesforceDB are committed across multiple availability zones, which ensures that there is no data loss even if a node or zone fails. If a failure occurs, in-flight transactions are aborted, and committed transactions are successfully recovered. Since failures don’t lose committed data, failover to new nodes is automated.

Cluster management software automatically handles failovers by monitoring quorums and managing ownership transfers. This process is used not only in emergencies but also routinely during regular patching, which improves the system’s reliability through constant use. Short database restarts typically go unnoticed by end users, maintaining a seamless user experience.

Salesforce does three major schema updates per year, with smaller schema updates weekly. SalesforceDB provides zero-downtime schema operations that enable these updates to be done with no customer impact.

Our transactional database serves as the primary repository for customer data, which is cached across multiple availability zones and stored in the cloud. Each data block is secured with an immutable checksum, verified by both the storage layer and the database engine. The database performs lineage tracking to detect any out-of-order changes or missed versions and runs ongoing consistency checks between indexes and base tables.
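A minimal sketch of per-block checksum verification follows. The choice of SHA-256 and the dictionary layout are assumptions made for illustration; the source does not state which checksum algorithm or block format SalesforceDB uses.

```python
import hashlib

def seal_block(data: bytes) -> dict:
    """Attach a checksum to a block at write time (hypothetical block layout)."""
    return {"data": data, "checksum": hashlib.sha256(data).hexdigest()}

def verify_block(block: dict) -> bool:
    """Recompute the checksum on read and compare it with the stored value."""
    return hashlib.sha256(block["data"]).hexdigest() == block["checksum"]

block = seal_block(b"account:001|name:Acme")
corrupted = {"data": b"account:001|name:Acmf", "checksum": block["checksum"]}
print(verify_block(block), verify_block(corrupted))
```

Because the check can be repeated independently at each layer that handles the block, corruption introduced between the storage layer and the database engine is detectable at the point where it is read.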

For ransomware protection, databases are archived in separate storage under a different account, including both full and incremental transaction log backups. These backups are regularly validated through a restoration testing process. Additionally, cloud infrastructure is pre-configured but not activated, ready to manage data restoration requests as needed.

Each Salesforce org is housed in a Hyperforce cell, which includes the SalesforceDB service. This setup allows for rapid global scaling through the creation of new cells via the Hyperforce architecture, and traffic can be easily shifted between cells to manage load. However, as customer workloads and business demands increase, the capacity of a single database instance may be insufficient.

To address this, SalesforceDB employs a horizontal scaling architecture for both its storage and compute tiers. Cloud storage is virtually unlimited, and the cache layers automatically scale to meet demand. Additionally, the compute tier can expand by adding more database compute nodes, which efficiently read from shared immutable storage without needing coordination. This approach allows SalesforceDB to achieve scalability that matches or exceeds that of leading commercial cluster database architectures, without requiring special networking or hardware.

Salesforce is a multitenant application where a single database hosts multiple tenants. Each table record includes a tenant ID to distinguish its ownership, and tenant isolation is maintained through automatic query predicates added by Salesforce’s application layer.

SalesforceDB is tailored to this model, supporting tenant-specific DDL, metadata, and runtime processes, enhancing reliability, performance, and security. It combines the low overhead of a tenant-per-row model with the efficiency of a tenant-per-database schema.

In SalesforceDB, tenant IDs are part of the primary key in multitenant tables, which cluster data by tenant in the LSM data structure, enhancing access efficiency. This setup not only facilitates efficient data access and per-tenant encryption but also simplifies tenant data management. Tenants can be easily copied or moved with minimal metadata adjustments due to the compact metadata structure.
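The effect of leading the primary key with the tenant ID can be sketched with a sorted key list: all rows for one tenant are contiguous, so a tenant scan is a single range read. The class and method names below are hypothetical, and an in-memory sorted list stands in for the key-ordered LSM files.

```python
import bisect

class TenantClusteredTable:
    """Toy table whose primary key is (tenant_id, record_id), kept in key order."""

    def __init__(self):
        self._keys = []     # sorted list of (tenant_id, record_id)
        self._values = {}

    def upsert(self, tenant_id, record_id, value):
        key = (tenant_id, record_id)
        if key not in self._values:
            bisect.insort(self._keys, key)
        self._values[key] = value

    def scan_tenant(self, tenant_id):
        """Return one tenant's rows with a single contiguous range read."""
        # (tenant_id,) sorts before every (tenant_id, record_id) key.
        start = bisect.bisect_left(self._keys, (tenant_id,))
        rows = []
        for key in self._keys[start:]:
            if key[0] != tenant_id:
                break  # the tenant's range has ended
            rows.append((key[1], self._values[key]))
        return rows

table = TenantClusteredTable()
table.upsert("org2", "rec1", "Globex")
table.upsert("org1", "rec2", "Acme West")
table.upsert("org3", "rec1", "Initech")
table.upsert("org1", "rec1", "Acme East")
print(table.scan_tenant("org1"))
```

The same contiguity is what makes per-tenant operations such as copying or moving a tenant a matter of handling one key range rather than filtering an entire table.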

AI, analytics, and data capabilities are essential in modern enterprises. Enterprises already invest in mature big data platforms such as Snowflake, Databricks, BigQuery, and Redshift. However, many customers are not deriving business value out of their data due to data silos, lack of AI processing, stale data, or inaction within an existing business process. Centralizing customer data into a single source of truth, with a single view of customer engagement, is both crucial to a business and challenging due to data fragmentation and the complexity of system management. Salesforce leads in facilitating a holistic view of a customer by integrating data, AI, and CRM into a virtuous circle, driven by generative AI and machine learning insights and powered by data.

SalesforceDB is optimized for high-performance transactional workloads on structured data, whereas AI and analytics workloads require handling large volumes of unstructured data from various sources and performing complex queries and batch processing. To address these needs, Salesforce has developed Data 360, a platform designed to break down data silos, unify, store, and process data securely and efficiently, supporting AI and analytics demands, and enabling real-time enterprise operations.

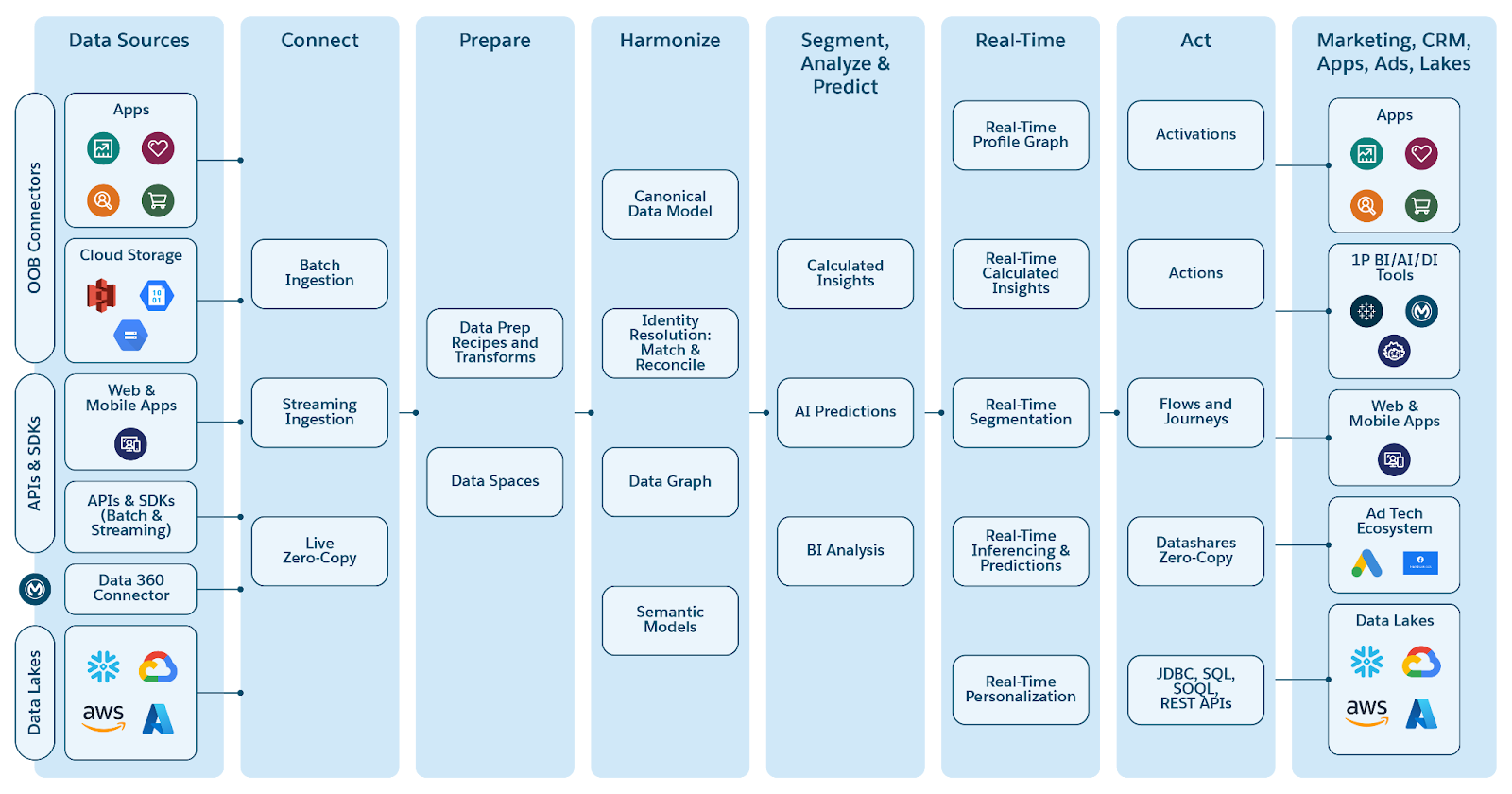

Data 360, built on Hyperforce, serves as the foundational platform for AI and Analytics, offering:

- Integrated infrastructure and a no-code platform to consolidate data silos through connections

- Real and near real-time data ingestion

- Zero-copy federation

- Data cleansing, preparation, and shaping for processing

- Unified Query Service over structured and unstructured data

- Development of analytical and AI/ML models for insight generation

- Data-triggered actions and activations

- Support for generative AI retrieval augmented generation (RAG)

- Comprehensive policy-based governance

Data 360 Architecture supports a number of components and capabilities, which are outlined below.

Data 360 supports efficient ingestion pipelines from various structured and unstructured data sources, for batch, near real-time, and real-time data processing. Data 360’s ingestion service operates on an Extract-Load-Transform (ELT) pattern, designed for low-latency and suitable for B2C scale. Real-time ingestion includes APIs and interactive streams, while near real-time sources cover detailed product usage. Once ingested, data is extensively transformed to prepare, harmonize (for example, unifying various contact types), and model it for effective querying, analytics, and AI applications. The platform also includes a wide range of ready-to-use harmonized data models.

Data 360 integrates seamlessly with Salesforce applications such as Sales Cloud, Service Cloud, Marketing Cloud, and Commerce Cloud. Additionally, it offers hundreds of connectors for external data sources, ensuring smooth data integration.

Data 360 features a native lakehouse architecture based on Iceberg/Parquet, designed to handle large-scale data management and processing for batch, streaming, and real-time scenarios. This architecture supports both structured and unstructured data, crucial for AI and analytics applications.

In cloud-based data lakes like Azure, AWS, or GCP, the fundamental storage unit is a file, typically organized into folders and hierarchies. This lakehouse enhances this structure by introducing higher-level structural and semantic abstractions to facilitate operations like querying and AI/ML processing. The primary abstraction is a table with metadata that defines its structure and semantics, incorporating elements from open-source projects like Iceberg or Delta Lake, with additional semantic layers added by Data 360.

Abstraction Layers in the lakehouse:

- Parquet File Abstraction: At the base, storage consists of data lake files (for example S3 in AWS or Blob in Azure) in Parquet format. Data for a source table is stored across multiple partitions as Parquet files, with each table being a collection of these files.

- Iceberg Table Abstraction: Tables are organized as folders, with data partitions stored as Parquet files within these folders. Modifications to a partition result in new Parquet files as snapshots. Iceberg manages a metadata file for each table, detailing schemas, partition specs, and snapshots.

- Salesforce Cloud Table Abstraction: Building on Iceberg, this layer adds semantic metadata such as column names and relationships, along with configurations like target file size and compression. It abstracts tables across various platforms like Snowflake and Databricks, shielding Data 360 applications from underlying storage platform specifics.

- Lake Access Library: This library provides access to the Salesforce Cloud Table, handling both data and metadata, and abstracts the underlying storage mechanisms for application developers.

- Big Data Service Abstraction: This includes processing frameworks like Trino and Hyper for querying, and Spark for processing across any cloud table platform.
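The snapshot behavior described for the table abstraction, where a modification produces new files and a new snapshot rather than changing existing files, can be sketched as follows. This is a simplified model with hypothetical class names and file names; it does not use or reproduce the Iceberg metadata format.

```python
from dataclasses import dataclass, field
from typing import Dict, List, Tuple

@dataclass(frozen=True)
class Snapshot:
    snapshot_id: int
    files: Tuple[str, ...]  # data files visible in this snapshot

@dataclass
class TableMetadata:
    schema: Dict[str, str]
    snapshots: List[Snapshot] = field(default_factory=list)

    def current(self) -> Snapshot:
        return self.snapshots[-1] if self.snapshots else Snapshot(0, ())

    def commit(self, added=(), removed=()) -> Snapshot:
        """Record a new snapshot; existing files and snapshots are never edited."""
        base = self.current()
        files = tuple(f for f in base.files if f not in removed) + tuple(added)
        snap = Snapshot(base.snapshot_id + 1, files)
        self.snapshots.append(snap)
        return snap

table = TableMetadata(schema={"id": "string", "amount": "double"})
table.commit(added=["part-0-v1.parquet", "part-1-v1.parquet"])
# Rewriting partition 0 adds a new file and retires the old one in a new snapshot.
table.commit(added=["part-0-v2.parquet"], removed=["part-0-v1.parquet"])
print(table.current().files)
```

Since earlier snapshots remain intact, a reader can keep using the file list it started with while a writer commits a newer one.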

Data 360 Lakehouse supports B2C scale, real-time ingestion, processing, schema enforcement and evolution, snapshots, and uses open storage formats.

To support real-time analytics and agentic applications, Data 360 augments a lakehouse’s big data storage with an additional Low Latency Store (LLS). Data 360’s real-time processing layer analyzes real-time signals and engagement data in memory. As memory-based storage capacity is limited, not all data can be processed at once. Data 360 adds the LLS to remove this limitation, enabling scalable real-time processing.

The Low Latency Store is a petabyte-scale NVMe (SSD) storage layer on the lakehouse. It is a durable cache: most data eventually makes it to the lakehouse for long-term persistence. In-session data in the real-time layer can be flushed to the LLS for subsequent fast access. For example, in an agentic conversation, recent messages can be processed in memory; older messages can be flushed to the LLS. If a previous conversation is required, it can be accessed within a few milliseconds from the LLS. NVMe-based storage allows for large amounts of data to be stored and accessed at millisecond latencies.

In addition, data from the lakehouse that is required for real time processing or to augment real time experiences is retrieved and kept in the LLS. For example, customer profile context is pre-fetched or brought from the lakehouse and cached in the LLS. Also, any lakehouse objects and other objects that are required for real-time processing during in-session processing can also be cached in the LLS. The LLS enables a real-time layer on a true storage hierarchy with memory, SSD and lakehouse storage layers, with data seamlessly migrating between each.
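The three-tier hierarchy of memory, LLS, and lakehouse can be sketched as a small tiered store. This is a conceptual illustration only: the class name, the eviction rule (oldest entry first), and the use of plain dictionaries for each tier are assumptions, not a description of the actual system.

```python
from collections import OrderedDict

class TieredStore:
    """Toy storage hierarchy: memory -> low latency store (LLS) -> lakehouse."""

    def __init__(self, memory_capacity):
        self.memory = OrderedDict()  # small and fastest
        self.lls = {}                # stands in for the NVMe-backed LLS
        self.lakehouse = {}          # stands in for long-term storage
        self.memory_capacity = memory_capacity

    def put(self, key, value):
        self.memory[key] = value
        self.memory.move_to_end(key)
        while len(self.memory) > self.memory_capacity:
            old_key, old_value = self.memory.popitem(last=False)
            self.lls[old_key] = old_value  # flush the oldest entry to the LLS

    def get(self, key):
        """Return (value, tier it was served from)."""
        if key in self.memory:
            return self.memory[key], "memory"
        if key in self.lls:
            return self.lls[key], "lls"
        if key in self.lakehouse:
            self.lls[key] = self.lakehouse[key]  # cache for later fast access
            return self.lls[key], "lakehouse"
        return None, "miss"

store = TieredStore(memory_capacity=2)
for i, text in enumerate(["hello", "order status?", "thanks"], start=1):
    store.put(f"msg-{i}", text)
store.lakehouse["profile:42"] = {"lifetime_spend": 1200}
first = store.get("profile:42")
second = store.get("profile:42")
print(store.get("msg-1"), first[1], second[1])
```

In the usage above, the oldest conversation message is served from the LLS after being flushed from memory, and the profile is served from the LLS on its second read.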

Data 360 also offers robust support for security, including Tenant-Level Encryption (TLE) with customer-managed keys, as well as privacy and compliance through its governance technologies. At the core is attribute-based access control (ABAC) support, which dynamically evaluates access based on attributes related to entities, operations, and environmental factors. This system supports both discretionary and mandatory access controls.

Complementing ABAC, a detailed data classification system categorizes data by sensitivity and purpose, enhancing compliance, risk management, and incident response. Together, ABAC and this classification system provide comprehensive data governance, ensuring data within Data 360 is managed securely and efficiently.

Data 360 integrates deeply with the Salesforce Platform for metadata, packaging, extensibility, user experience, and application distribution via AppExchange. Customers can define and manage the metadata for lakehouse streams and tables, just like other Salesforce metadata. Every data object (including federated or external tables) is represented as a Salesforce object, and modeled as virtual entities backed by data storage in Data 360. They can be used by developers to build applications on the Salesforce Platform.

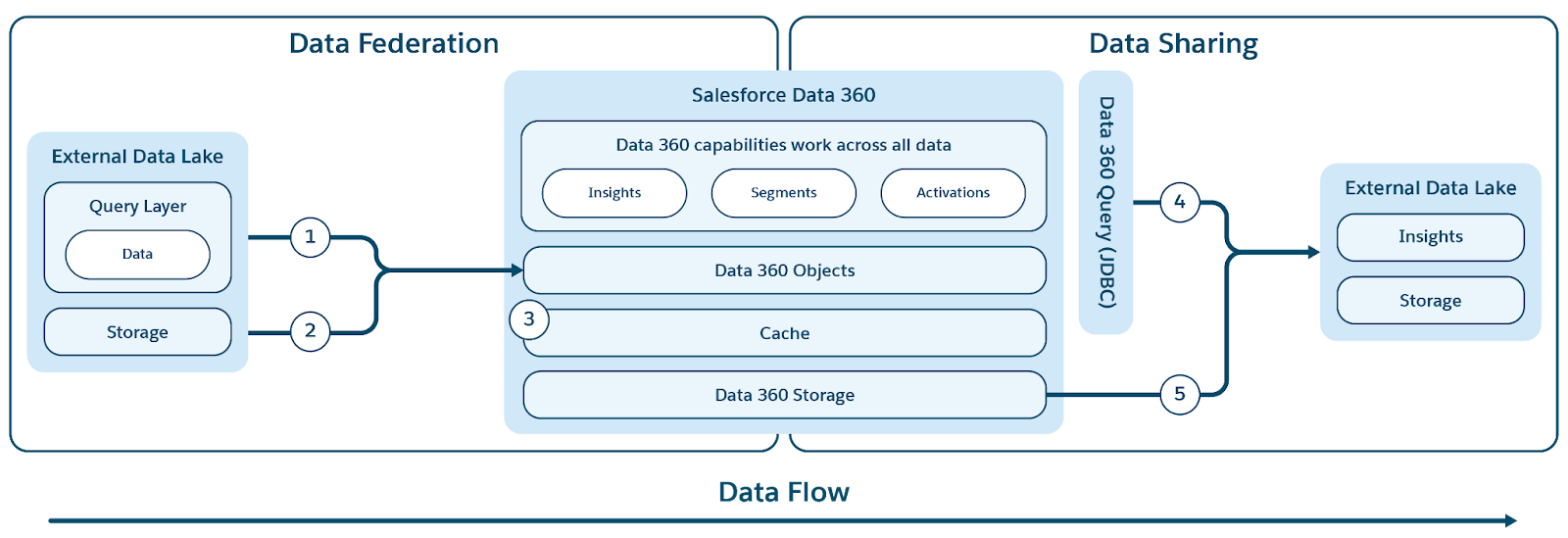

Data 360 offers extensive support for zero-copy federation, allowing users to integrate with external data warehouses like Snowflake and Redshift, lakehouses such as Google BigQuery, Databricks, and Azure Fabric, as well as SQL databases and various file types including Excel. Data 360 supports file and query-based federation, with live query and access acceleration as shown in the figure. Labels (1) and (2) illustrate Data 360’s query (including live query pushdowns) and file-based federation for accessing data from external data lakes/warehouses/data sources; and label (3) highlights acceleration of federated access from external data lakes/data sources. Labels (4) and (5) illustrate query and file-based sharing of data from Data 360 with external data lakes/warehouses. This capability also extends to unstructured data sources like Slack and Google Drive, which can be accessed by Data 360’s unstructured processing pipelines. Additionally, Data 360 facilitates the abstraction of Salesforce objects and data access for data federated from external sources, enabling access to such data across the Salesforce platform and applications.

Data 360 integrates a CDP that features advanced identity resolution capabilities, creating unified individual identifiers and profiles along with comprehensive engagement histories. This platform is adept at handling both business-to-business (B2B) and business-to-consumer (B2C) frameworks by supporting identity graphs that utilize both exact and fuzzy matching rules. These identity graphs are enriched with engagement data from various channels, which helps in building detailed profile graphs with valuable analytical insights and segments.
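Identity resolution with exact and fuzzy matching rules can be sketched with a union-find structure that merges records whenever a rule fires. The rules, threshold, field names, and sample records below are hypothetical and chosen only for illustration; they are not Data 360's matching rules.

```python
from difflib import SequenceMatcher

def same_person(a, b, fuzzy_threshold=0.85):
    """Exact rule: same email. Fuzzy rule: similar name and same postal code."""
    if a["email"] and a["email"].lower() == b["email"].lower():
        return True
    ratio = SequenceMatcher(None, a["name"].lower(), b["name"].lower()).ratio()
    same_postal = bool(a["postal_code"]) and a["postal_code"] == b["postal_code"]
    return ratio >= fuzzy_threshold and same_postal

def resolve_identities(records):
    """Group record ids into unified profiles using union-find."""
    parent = list(range(len(records)))

    def find(i):
        while parent[i] != i:
            parent[i] = parent[parent[i]]  # path compression
            i = parent[i]
        return i

    for i in range(len(records)):
        for j in range(i + 1, len(records)):
            if same_person(records[i], records[j]):
                parent[find(j)] = find(i)

    clusters = {}
    for i, record in enumerate(records):
        clusters.setdefault(find(i), []).append(record["id"])
    return sorted(clusters.values())

records = [
    {"id": "r1", "name": "John Smith", "email": "john@example.com", "postal_code": "94105"},
    {"id": "r2", "name": "J. Smith", "email": "JOHN@example.com", "postal_code": ""},
    {"id": "r3", "name": "Jon Smith", "email": "", "postal_code": "94105"},
    {"id": "r4", "name": "Mary Jones", "email": "mary@example.com", "postal_code": "10001"},
]
profiles = resolve_identities(records)
print(profiles)
```

Note that r2 and r3 never match each other directly; they end up in the same profile because each matches r1, which is the transitive linking an identity graph provides. The pairwise comparison is quadratic and is only workable for a sketch of this size.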

Additionally, the CDP enables effective segmentation and activation across different platforms such as Salesforce’s Marketing Cloud, Facebook, and Google. It processes customer profiles in batch, near real-time, and real-time, which allows for immediate decision-making and personalization. This functionality enhances interactions in both B2C and B2B scenarios, ensuring that businesses can respond swiftly and accurately to customer needs and behaviors.

Data 360 offers an enterprise data graph in JSON format, which is a denormalized object derived from various lakehouse tables and their interrelationships. This includes a "Profile" data graph created by CDP that encompasses a person’s purchase and browsing history, case history, product usage, and other calculated insights, and is extensible by customers and partners. These data graphs are tailored to specific applications and enhance generative AI prompt accuracy by providing relevant customer or user context.

Additionally, plans are in place to expand these data graphs to include knowledge graphs that capture and model derived knowledge, such as extracted entities and relationships from unstructured data. The real-time layer of Data 360 utilizes the Profile graph for real-time personalization and segmentation.

Data 360’s real-time layer is engineered to process events such as web and mobile clickstreams, visits, cart data, and checkouts at millisecond latencies, enhancing customer experience personalization. It continuously monitors customer engagement and updates the customer profile from CDP with real-time engagement data, segments, and calculations for immediate personalization.

For example, when a consumer purchases an item on a shopping website, the real-time layer quickly detects and ingests this event, identifies the consumer, and enriches their profile with updated lifetime spend information. This allows their experience on the site to be personalized in under a second. Additionally, this layer includes capabilities for real-time triggering and responses, enabling immediate actions based on customer interactions.

Personalization is knowing which persona to target, when and where to deliver relevant content and recommendations, what to say and at what frequency. The Personalization Services Platform capability of Data 360 is the orchestrator of which decisions are made to optimize goal achievement through personalized experiences. This platform provides the following capabilities:

- Consistent set of models and ways to interpret profile, activity, and asset data in Data 360.

- Platform-integrated experimentation (for example, A/B/n or multi-arm bandit decision making).

- Integration of goals at design time via configuration, ML training time and runtime (ML inference).

- B2C scale, real-time and batch interaction support (anonymous users, high-volume real-time/interactive external, high-volume internal batch).

- Analytics driven through Data 360.

- Patterns to integrate AI models and service from other parties (both internal and external).

- OOTB implementations of high-value AI-driven use cases (recommendations and decisions with various ML algorithms, including contextual bandits for promotion/content selection, product recommendations and pricing decisions).
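The multi-arm bandit style of experimentation mentioned in the list above can be illustrated with an epsilon-greedy sketch. This is a generic textbook technique, not the platform's algorithm, and it is simpler than the contextual bandits the list refers to; the class name and arm labels are hypothetical.

```python
import random

class EpsilonGreedyBandit:
    """Pick the best-known arm most of the time and explore occasionally."""

    def __init__(self, arms, epsilon=0.1, seed=None):
        self.arms = list(arms)
        self.epsilon = epsilon
        self.counts = {arm: 0 for arm in self.arms}
        self.values = {arm: 0.0 for arm in self.arms}  # running mean reward
        self._rng = random.Random(seed)

    def select(self):
        if self._rng.random() < self.epsilon:
            return self._rng.choice(self.arms)  # explore
        return max(self.arms, key=lambda arm: self.values[arm])  # exploit

    def update(self, arm, reward):
        """Fold one observed reward into the arm's running mean."""
        self.counts[arm] += 1
        self.values[arm] += (reward - self.values[arm]) / self.counts[arm]

# epsilon=0.0 disables exploration so the example is deterministic.
bandit = EpsilonGreedyBandit(["banner_a", "banner_b"], epsilon=0.0)
bandit.update("banner_a", 1.0)  # clicked
bandit.update("banner_a", 0.0)  # ignored
bandit.update("banner_b", 1.0)  # clicked
print(bandit.select(), bandit.values)
```

Unlike a fixed A/B split, a bandit shifts traffic toward the better-performing variant while the experiment is still running, at the cost of less statistical symmetry between arms.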

Data 360 is an active platform that supports the activation of pipelines in response to data events. For instance, a significant event, such as a drop in a customer’s account balance, can trigger a Salesforce Flow to orchestrate a corresponding action. Similarly, updates to key metrics, such as lifetime spend, can be automatically propagated to relevant applications.

Data 360 features elastic scaling compute clusters that efficiently handle processing tasks. It offers robust management for both multitenant and dedicated compute environments. Additionally, it provides managed support for Spark and SQL. BYOC (Bring Your Own Compute/Code) features support multiple programming languages, including Java, Python, and Spark, allowing for the integration of custom transforms, models (including LLMs), and functions, enhancing extensibility.

Data 360 Compute Fabric provides a unified layer known as Data Processing Controller (DPC) for managing and executing all big data workloads. DPC is a comprehensive, multi-workload data processing orchestration service that provides Job-as-a-Service (JaaS) capabilities across diverse cloud compute environments. It abstracts infrastructure complexity and unifies job execution for frameworks like Spark (EMR on EC2 and EMR on EKS) and Kubernetes Resource Controller (KRC) workloads. By serving as a centralized control plane gateway, DPC orchestrates, schedules and monitors jobs across multiple data planes, ensuring reliability, scalability, cost efficiency and a consistent developer experience.

Data 360’s Query Service provides advanced querying capabilities, featuring extensive SQL support for both structured and unstructured data via Trino and Hyper. It enhances functionality with operator extensibility through Table Functions, allowing for diverse search operations across text, image, spatial, and other unstructured data types. These capabilities are seamlessly integrated with relational operations, such as selecting customer records. This unified approach enables the generation of targeted and personalized results, facilitating more precise LLM responses using RAG.

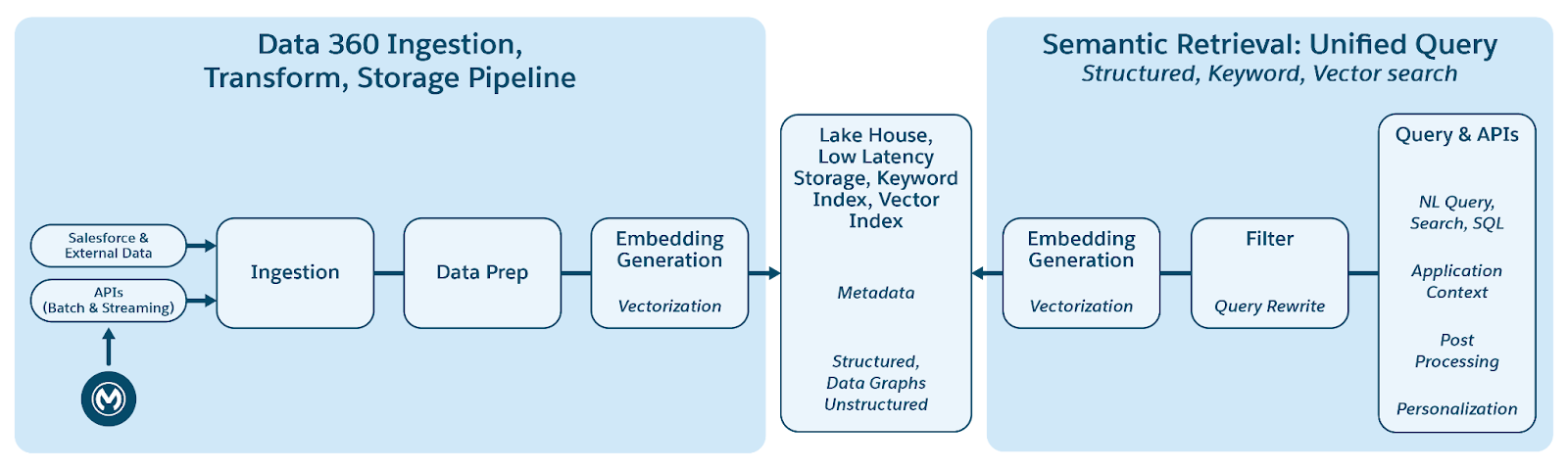

Data 360 supports storage and management of structured (tables), semi-structured (JSON), and unstructured data seamlessly across data ingestion, processing, indexing, and query mechanisms. Data 360 supports various unstructured data types beyond text, including audio, video, and images, broadening the scope of data handling and analysis. The accompanying figure illustrates the two sides of grounding (ingestion and retrieval).

Data 360 manages unstructured data by storing it in columns as text or in files for larger datasets. It supports data federation for unstructured content, which allows for the integration and management of data from multiple sources.

Data 360’s unstructured data indexing pipeline is designed as a modular, extensible architecture comprising five core stages: Parsing, Pre-Processing, Chunking, Post-Processing and Embedding. These stages are then followed by keyword and vector indexing. Examples of Pre-Processing include operations such as noise elimination, language normalization, and image understanding (Optical Character Recognition), while Post-Processing stages may include metadata enrichment, semantic grouping, or advanced techniques such as chunking.

Data 360 provides multiple out-of-the-box and pluggable models for chunking and embedding generation. Data pipelines in Data 360 fully support code extensions, allowing customers and internal teams to plug in custom logic at any stage. These stages also support LLM-based processing, which allows customers to define their own prompts as needed.

For indexing, Data 360 supports keyword indexing using search services and vector indexing using Milvus, an open-source native vector index. To set up RAG over unstructured data, Data 360 uses context indexing, which enables rapid iteration and quick validation with sample test queries and lets persona-specific content be configured for the consuming persona or user.

The Document AI capability of Data 360 supports reading and importing unstructured or semi-structured data from documents like invoices, resumes, lab reports, and purchase orders. This feature supports ad-hoc interactive processing, as well as bulk batch processing. This is a key capability that enables business process automation for our customers.

Data 360 features a headless Semantic Layer with APIs designed to enhance business semantics and AI/ML-driven analytics, similar to Tableau Next. This layer includes a semantic data modeling service that enriches traditional analytical models with business taxonomy such as measures and metrics.

Its semantic query service utilizes a declarative language to interact with these models, translating queries into SQL for accessing data from both native and federated data sources within Data 360.

This integration facilitates scalable and interactive analytical explorations, reports, and dashboards, compatible with third-party visualization tools.
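As a rough illustration of how a declarative semantic query might be translated into SQL, the sketch below resolves measure and dimension names against a small model definition. The model layout, the query shape, and the `to_sql` function are assumptions made for this example; they do not reflect the actual semantic query language.

```python
# Hypothetical semantic model: business names mapped to physical expressions.
SEMANTIC_MODEL = {
    "table": "sales_order",
    "dimensions": {"region": "billing_region"},
    "measures": {
        "total_revenue": "SUM(amount)",
        "order_count": "COUNT(*)",
    },
}


def to_sql(query: dict, model: dict = SEMANTIC_MODEL) -> str:
    """Translate {'measures': [...], 'dimensions': [...]} into a SQL string."""
    dimension_names = query.get("dimensions", [])
    select_dims = [f"{model['dimensions'][d]} AS {d}" for d in dimension_names]
    select_measures = [f"{model['measures'][m]} AS {m}" for m in query["measures"]]
    sql = f"SELECT {', '.join(select_dims + select_measures)} FROM {model['table']}"
    if dimension_names:
        group_by = ", ".join(model["dimensions"][d] for d in dimension_names)
        sql += f" GROUP BY {group_by}"
    return sql


sql = to_sql({"measures": ["total_revenue"], "dimensions": ["region"]})
```

The caller asks for `total_revenue` by `region` and never needs to know the underlying column names or aggregation expressions; those live in the model.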

Data 360 functions as a centralized governance hub, ensuring that all data, from raw ingestion to activated insights, is managed with integrity and control. Data 360 has adopted Attribute-Based Access Control as its core authorization model. ABAC allows access decisions to be based on attributes of the user (department, role, location), data (personal information, sensitivity, data space), and environment (for example, time of day), rather than predefined roles. This enables highly granular and contextual access policies that adapt as data and user attributes change. At the heart of Data 360's ABAC implementation is the use of the CEDAR policy language. This purpose-built, open-source, formal policy language provides a precise and verifiable way to define complex authorization rules, ensuring that policies are unambiguous and can be evaluated consistently at scale.

The governance lifecycle includes key capabilities regarding policy information, enforcement and decision points:

- Tagging and Classification (Policy Information Point): Data is identified and enriched with critical attributes. Data 360 provides automated tagging and classification mechanisms, leveraging discovery, LLMs, and machine learning to identify sensitive data categories (for example, personally identifiable information such as email, phone, name) in both structured and unstructured data.

- Authorization Service (Policy Enforcement Point): This service intercepts all data access requests from various consumption layers (hybrid structured/unstructured queries, RAG retrievers & prompts, and CRM enrichment) and consults the Policy Decision Point to determine if access is permitted.

- Policy Evaluation Engine (Policy Decision Point): This engine takes the access request context from the Policy Enforcement Point, along with policy definitions (in CEDAR), and attributes from the Policy Information Point, to make an authoritative access decision.

The ABAC framework with CEDAR policies provides control and flexibility, ensuring that customer data is not only actionable but also secure, compliant, and trustworthy across the enterprise.
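The interplay of the three points can be sketched in a few lines. In this illustration, policies are Python predicates standing in for CEDAR policies (this is not CEDAR syntax), and all names, tags, and attributes are hypothetical. The decision rule assumed here is deny by default, with any matching forbid overriding permits.

```python
from dataclasses import dataclass
from typing import Callable


@dataclass(frozen=True)
class Request:
    user: dict          # user attributes, e.g. department
    action: str
    resource: str
    environment: dict   # contextual attributes, e.g. business hours


# Policy Information Point: attributes produced by tagging and classification.
RESOURCE_TAGS = {
    "contact.email": {"sensitivity": "pii"},
    "product.name": {"sensitivity": "public"},
}


@dataclass(frozen=True)
class Policy:
    effect: str                                   # "permit" or "forbid"
    condition: Callable[[Request, dict], bool]


POLICIES = [
    Policy("permit", lambda r, t: r.action == "read" and t["sensitivity"] == "public"),
    Policy("permit", lambda r, t: r.action == "read"
           and t["sensitivity"] == "pii"
           and r.user.get("department") == "support"),
    Policy("forbid", lambda r, t: t["sensitivity"] == "pii"
           and not r.environment.get("business_hours", False)),
]


def decide(request: Request, tags: dict) -> bool:
    """Policy Decision Point: deny by default, forbid overrides permit."""
    effects = [p.effect for p in POLICIES if p.condition(request, tags)]
    return "permit" in effects and "forbid" not in effects


def authorize(request: Request) -> bool:
    """Policy Enforcement Point: gather attributes, then ask the PDP."""
    tags = RESOURCE_TAGS.get(request.resource)
    if tags is None:
        return False  # unclassified data is not released
    return decide(request, tags)


support = {"department": "support"}
sales = {"department": "sales"}
daytime = {"business_hours": True}
```

With these policies, `authorize(Request(support, "read", "contact.email", daytime))` is permitted, while the same request from a sales user, or from the support user outside business hours, is denied.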

Caches are essential for fast access to frequently used data. Salesforce uses many caches across the Salesforce Platform, including in Core Application Servers, SalesforceDB, and at the Edge. The Salesforce Platform and applications need a scalable, tenant-aware caching solution with low latency and high throughput. This solution must allow Salesforce engineers to control what’s cached and for how long, ensuring that their data is not evicted by system noise or other customers’ data. Vegacache, a Salesforce-managed caching service based on Redis, is tailored for a polyglot, multitenant, and public cloud environment. It’s widely used by Salesforce services and accessible to platform developers via Apex programming language APIs. Operating at scale in Hyperforce, Vegacache handles over 2 trillion requests daily with sub-millisecond response times as of this writing.

Vegacache instances run in Kubernetes containers, are accessed via Service Mesh, and are deployed across multiple Availability Zones to balance data availability and latency. The service scales automatically based on system load while maintaining data availability and preserving slot ordering. Vegacache provides a guaranteed cache size per customer and protection against noisy neighbors, and its replicated data storage provides resilience against infrastructure failures.

Vegacache lets Salesforce Platform developers cache Apex Objects and SOQL database query results, reducing CPU usage and latency by eliminating unnecessary data fetches from SalesforceDB. It supports Put(), Get(), and Delete() operations, keeping frequently used objects readily accessible.
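A tenant-aware cache with put, get, and delete operations can be sketched in memory as follows. This is not Vegacache or its Apex API: `TenantCache`, `cached_query`, the per-tenant capacity, and the least-recently-used eviction policy are all assumptions chosen to show how one tenant's writes can be kept from evicting another tenant's entries.

```python
import time
from collections import OrderedDict


class TenantCache:
    """Hypothetical in-memory cache with a fixed entry budget per tenant."""

    def __init__(self, capacity_per_tenant=2, clock=time.monotonic):
        self._capacity = capacity_per_tenant
        self._clock = clock
        self._data = {}  # tenant -> OrderedDict[key -> (expires_at, value)]

    def put(self, tenant, key, value, ttl_seconds):
        entries = self._data.setdefault(tenant, OrderedDict())
        entries[key] = (self._clock() + ttl_seconds, value)
        entries.move_to_end(key)
        while len(entries) > self._capacity:
            entries.popitem(last=False)  # evict only within this tenant

    def get(self, tenant, key):
        entries = self._data.get(tenant)
        if entries is None or key not in entries:
            return None
        expires_at, value = entries[key]
        if self._clock() >= expires_at:
            del entries[key]  # expired: treat as a miss
            return None
        entries.move_to_end(key)
        return value

    def delete(self, tenant, key):
        entries = self._data.get(tenant)
        if entries is not None:
            entries.pop(key, None)


def cached_query(cache, tenant, soql, run_query, ttl_seconds=60):
    """Cache-aside: return a cached result, or run the query and store it."""
    result = cache.get(tenant, soql)
    if result is None:
        result = run_query(soql)
        cache.put(tenant, soql, result, ttl_seconds)
    return result
```

A second call to `cached_query` with the same tenant and query string within the TTL returns the stored result without invoking `run_query`, which is where the savings in database fetches would come from.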

Salesforce natively supports asynchronous data processes and architectures for enhanced workflow flexibility, resiliency, and scalability.

Salesforce engineers first leveraged message queues to decouple bulk and large data processes, as well as coordinate processes between independent systems. These message queues were abstracted from the external developer via platform features, like Bulk API queries or Asynchronous Apex. The Salesforce Platform then introduced log-organized event streams built on a robust messaging infrastructure of internally managed Apache Kafka clusters. This enabled an event-based architecture with a publish/subscribe interaction model, and was productized to external developers as Platform Events.

Both message queues and event streams remain heavily used by apps and solutions built on the platform, especially as those apps draw on more features, clouds, and external systems hosted on independent runtimes. Communicating via versioned event schemas enables independent software development lifecycles for the different runtimes. Decoupling systems via events also helps absorb load spikes and lets each runtime scale elastically, which supports higher overall resiliency and availability of an app.
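The value of versioned event schemas is easiest to see with a small example. The sketch below uses an in-memory, append-only log in place of a real event stream; `EventLog`, the topic name, and the two payload shapes are hypothetical. The consumer handles both schema versions, so the publisher can move to version 2 on its own release schedule.

```python
from collections import defaultdict


class EventLog:
    """In-memory stand-in for a log-organized, publish/subscribe event stream."""

    def __init__(self):
        self._topics = defaultdict(list)

    def publish(self, topic, schema_version, payload):
        log = self._topics[topic]
        log.append({
            "offset": len(log),
            "schema_version": schema_version,
            "payload": payload,
        })
        return log[-1]["offset"]

    def read(self, topic, from_offset=0):
        # Subscribers track their own offset and can replay from any point.
        return self._topics[topic][from_offset:]


def order_total(event):
    """Consumer that understands both versions of the (hypothetical) schema."""
    payload = event["payload"]
    if event["schema_version"] == 1:
        return payload["amount"]               # v1: decimal currency units
    if event["schema_version"] == 2:
        return payload["amount_cents"] / 100   # v2: integer cents
    raise ValueError(f"unsupported schema version {event['schema_version']}")


log = EventLog()
log.publish("order_created", 1, {"amount": 10.0})
log.publish("order_created", 2, {"amount_cents": 2550})
totals = [order_total(e) for e in log.read("order_created")]
```

Because events stay in the log, a consumer that was offline during a load spike can resume from its last offset instead of forcing the publisher to slow down.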

Search features at Salesforce, crucial for applications ranging from global search to generative AI, face unique challenges that shape our architectural approach:

- Scale: Supporting hundreds of thousands of customers and millions of tenants, our cloud-native search solution is designed for massive scale while remaining cost-effective.

- Customer Diversity: Salesforce’s diverse customer base across various industries has unique and complex search requirements due to extensive customization of the platform, involving numerous object types and fields.

- Operability: The search solution must be resilient and highly available, support data residency and tenant lifecycle operations such as regional migrations and sandboxing, and maintain low indexing latency with fairness between tenants.

- Relevance at Scale: Enhancing the relevance of search results to meet diverse user queries is critical, especially as we scale relevance algorithms to accommodate various tenants, data types, and search scenarios.

- AI and Semantic Capabilities: Search supports Machine Learning and generative AI, particularly for Retrieval-Augmented Generation (RAG) and Agentic Search.

- Seamless Integration: To ensure a cohesive user experience, Salesforce’s search technology integrates deeply with the broader Salesforce Platform, including metadata models and AI/data services.

Salesforce’s cloud-native solution, SeaS (Search as a Service), is built on Solr, an open-source distributed search engine. Salesforce has significantly extended and optimized Solr to meet our unique challenges and has integrated it deeply with Salesforce applications and platform, incorporating semantic technologies to enhance AI applications and search relevance.

SeaS employs a compute/storage separation architecture, allowing for scalable distribution of indexes across nodes and rebalancing loads and availability across zones during failures. It features automatic sharding and resizing of shards, zero-downtime upgrades, and optimizations like replica lazy loading and archiving to cater to rarely used indexes.

The architecture also includes a low-level index implementation optimized for a large number of fields, auto-complete, spell-correction, and bring-your-own-key encryption. Managing around 6,000 Solr nodes globally, Hyperforce search infrastructure uses multiple independent clusters (Hyperforce cells) in each region to balance cost and control, automatically placing client indexes based on load, domain, and type.

Salesforce’s search relevance pipeline employs learning-to-rank techniques, adapting to the diverse needs of our customers and supporting features such as result ranking. It also includes entity predictions from user queries and past interactions. Relevance models are continuously refined by learning from user interactions and evaluated through A/B testing, enhancing search result accuracy. This process also supports bootstrapping models for AI applications via knowledge transfer.

The stack incorporates a vector search engine for semantic search and AI applications, integrated with Data 360 for generative AI capabilities. This includes a comprehensive pipeline for data transformation, hybrid search support, and a catalog of configurable rankers, such as the Deep Fusion Ranker and Autodrop, which filters out low-relevance search results.
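To show what hybrid search followed by low-relevance filtering can look like, the sketch below merges a keyword ranking and a vector ranking, then drops weak results. The source does not describe how the Deep Fusion Ranker or Autodrop work, so this example substitutes two generic techniques: Reciprocal Rank Fusion for merging, and a cutoff relative to the top score for filtering. The function names and the `min_ratio` parameter are assumptions.

```python
def reciprocal_rank_fusion(rankings, k=60):
    """Merge ranked lists of document ids; higher fused score is better."""
    scores = {}
    for ranking in rankings:
        for rank, doc_id in enumerate(ranking, start=1):
            scores[doc_id] = scores.get(doc_id, 0.0) + 1.0 / (k + rank)
    # Sort by descending score, breaking ties by document id for determinism.
    return sorted(scores.items(), key=lambda item: (-item[1], item[0]))


def drop_low_relevance(scored, min_ratio=0.6):
    """Keep results scoring at least min_ratio of the best result's score."""
    if not scored:
        return []
    top_score = scored[0][1]
    return [(doc_id, s) for doc_id, s in scored if s >= top_score * min_ratio]


keyword_hits = ["a", "b", "c"]   # ids ranked by a keyword index
vector_hits = ["b", "a", "d"]    # ids ranked by a vector index
fused = reciprocal_rank_fusion([keyword_hits, vector_hits])
kept = drop_low_relevance(fused)
```

Documents found by both retrievers accumulate score from each list and rise to the top, while documents found by only one retriever at a low rank fall under the cutoff and are removed before results reach the consumer.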

As generative AI shifts the primary consumer of search services from human users to LLMs, the Salesforce search stack is adapting to find and return results optimized for this programmatic consumption, handling longer and more complex queries and returning more descriptive results such as chunks. This supports new Agentic Search capabilities, where Agentforce agents leverage search with a reasoning loop to accomplish complex tasks.

Salesforce’s search features span various contexts, including global search, lookups, search answers, community search, related lists, setup, mobile and generative AI applications. This broad functionality is achieved through tight integration of the Search stack with Salesforce’s metadata system and UI ecosystem, enabling seamless support for both standard and custom objects.

Additionally, integration with Data 360 enhances search capabilities on data objects through no-code configurations and allows for the composition of search functions within data pipelines, such as including search statements in SQL queries. The Search stack leverages Data 360’s rich connector ecosystem, such as the Google Drive permission-aware connector, to provide complete enterprise search capabilities. The integration extends to the AI Platform, enabling search queries to be used as retrievers in Prompt Builder for grounding and in Agentic Search.

AI has reshaped the technology landscape, and the Salesforce Platform, with its integrated and rich data layer, positions Salesforce to deliver impactful AI experiences to customers. Salesforce began its AI transformation nearly a decade ago and has led the field since 2013, focusing on research, ethics, and product development to empower businesses to solve complex problems and drive growth.

Leveraging the core value of Innovation, Salesforce introduced Einstein Predictive AI, enabling businesses to analyze data, automate processes, understand customers, and optimize operations with a comprehensive suite of AI-powered tools such as Einstein Prediction Builder and AI bots. With the rise of Generative AI, Salesforce launched Agentforce, a platform that merges predictive and generative models to offer advanced AI capabilities while prioritizing data privacy.

With the most recent launch of Agentforce 3.0, built on Python with an event-driven framework, Salesforce introduces enhanced flexibility through features like built-in conversation history, end-to-end session tracing, voice support, and custom reasoning engine (Bring Your Own Planner) functionality, enabling more scalable, customizable, and intelligent multi-agent systems.

Agentforce follows these core principles:

- Data Security and Ethics: Prioritizes data protection, compliance, and ethical AI principles.

- Transparency and Explainability: Offers clear understanding and validation of AI-generated outputs.

- Flexibility and Customization: Tailors AI applications to specific needs and industries.

- Seamless Integration: Integrates with Salesforce CRM and other systems.

- Scalability: Handles large-scale deployments and delivers real-time AI experiences.

- Intelligent and Consistent Experiences: Provides personalized, augmented, and automated experiences through connected data and contextual understanding.

- Comprehensive Observability: Provides deep visibility and monitoring into AI agent interactions to enable proactive optimization and fine-tuning of agents using Agentforce Interaction Explorer.

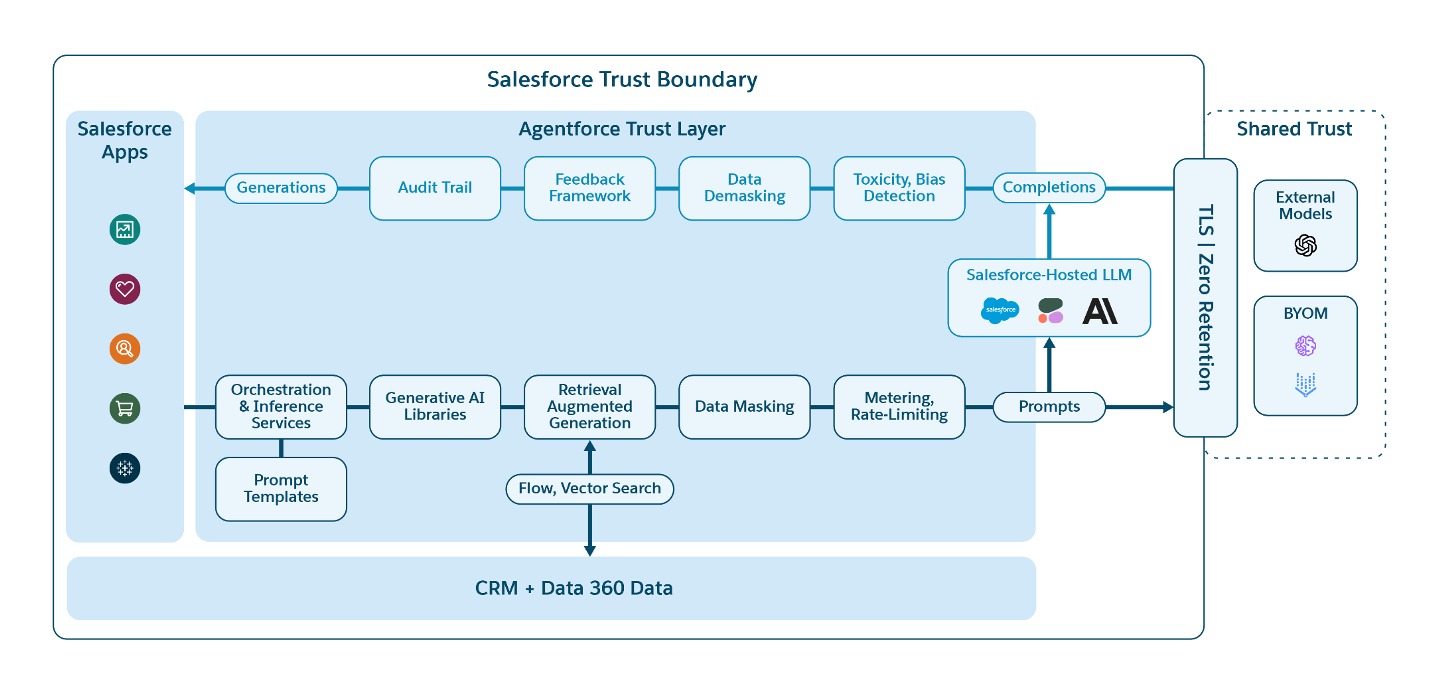

The AI stack consists of several key components:

- AI Platform: This platform layer is responsible for managing, training, and fine-tuning AI models used in both predictive and generative applications. It offers out-of-the-box (OOTB) services, trust services and foundational models for training, testing, and performing inference on models. Additionally, it supports the integration of your own predictive and generative models, allowing you to bring custom models within the platform.

- AI Foundational Services: This includes the AI Gateway, Feedback Framework, RAG, Agentic Orchestration, Agent Evaluation and Reasoning services, which facilitate the integration of business applications with the AI stack.